TriggerDAQIntro

Revision as of 16:16, 7 May 2020

Introduction

The Mu2e Experiment’s trigger is responsible for deciding which data is interesting, because it could contain signal and therefore must be saved, and which data is consistent with background and therefore does not need to be collected. These decisions must be made within stringent time and data-flow constraints. The data acquisition system collects the data selected by the trigger and assembles the information from the various detector components so that it can be stored and analyzed by the collaboration.

Requirements

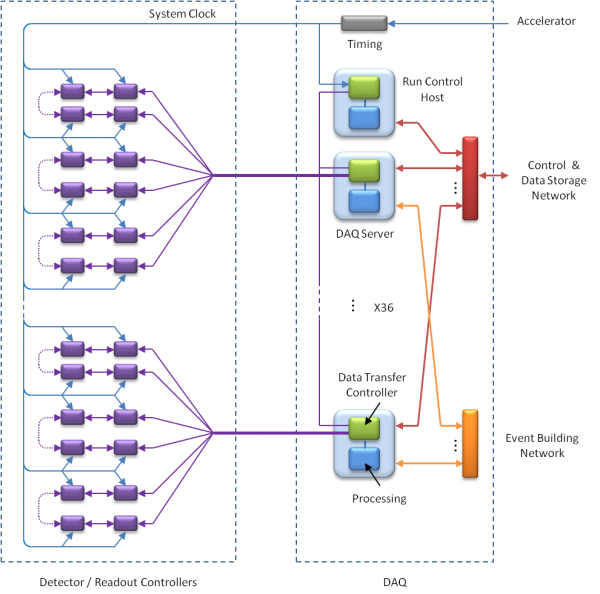

The Mu2e Trigger and Data Acquisition (DAQ) subsystem provides the components needed to collect digitized data from the Tracker, Calorimeter, Cosmic Ray Veto and Beam Monitoring systems and to deliver that data to online and offline processing for analysis and storage. It is also responsible for detector synchronization, control, monitoring, and operator interfaces.

The Mu2e Collaboration has developed a set of requirements for the Trigger and Data Acquisition System [1]. The DAQ must monitor, select, and validate physics and calibration data from the Mu2e detector for final stewardship by the offline computing systems. The DAQ must combine information from ~500 detector data sources and apply filters to reduce the average data volume by a factor of at least 100 before it can be transferred to offline storage.

The DAQ must also provide a timing and control network for precise synchronization and control of the data sources and readout, along with the Detector Control System (DCS) for operational control and monitoring of all Mu2e subsystems. DAQ requirements are based on the attributes listed below.

- Beam Structure

The beam timing is shown in Figure 1. Beam is delivered to the detector during the first 492 msec of each Supercycle. During this period there are eight 54 msec spills, and each spill contains approximately 32,000 “micro-bunches”, for a total of 256,000 micro-bunches in a 1.33 second Supercycle. A micro-bunch period is 1695 ns. Readout Controllers store data from the digitizers during the “live gate”. The live gate width is programmable, but is nominally the last 1000 ns of each micro-bunch period.

- Data Rate

The detector will generate an estimated 120 KBytes of zero-suppressed data per micro-bunch, for an average data rate of ~70 GBytes/sec when beam is present. To reduce DAQ bandwidth requirements, this data is buffered in Readout Controller (ROC) memory during the spill period and transmitted to the DAQ over the full Supercycle.

- Detectors

The DAQ system receives data from the subdetectors listed below.

- Calorimeter – 1356 crystals in 2 disks. There are 240 Readout Controllers located inside the cryostat. Each crystal is connected to two avalanche photodiodes (APDs). The readout produces approximately 25 ADC values (12 bits each) per hit.

- Cosmic Ray Veto system – 10,304 scintillating fibers connected to 18,944 Silicon Photomultipliers (SiPMs). There are 296 front-end boards (64 channels each), and 15 Readout Controllers. The readout generates approximately 12 bytes for each hit. CRV data is used in the offline reconstruction, so readout is only necessary for timestamps that have passed the tracker and calorimeter filters. The average rate depends on threshold settings.

- Extinction and Target Monitors – these monitors will be implemented as standalone systems with local processing. Summary information will be forwarded to the DAQ for inclusion in the run conditions database and, optionally, in the event stream.

- Tracker – 23,040 straw tubes, with 96 tubes per “panel”, 12 panels per “station” and 20 stations total. There are 240 Readout Controllers (one for each panel) located inside the cryostat. Straw tubes are read from both ends to determine hit location along the wire. The readout produces two TDC values (16 bits each) and typically six ADC values (10 bits each) per hit. The ADC values are the analog sum from both ends of the straw.

- Processing

The DAQ system provides online processing to run the calorimeter and tracker filters. The goal of these filters is to reduce the data rate by a factor of at least 100, limiting offline data storage to less than 7 PetaBytes/year. Based on preliminary estimates, the online processing requirement is approximately 30 TeraFLOPS.

- Environment

The DAQ system will be located in the surface-level electronics room in the Mu2e Detector Hall and connected to the detector by optical fiber. There are no radiation or temperature issues. The DAQ will, however, be exposed to a magnetic fringe field from the detector solenoid at a level of ~20-30 Gauss.
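The requirement figures above are meant to hang together arithmetically. The short Python sketch below recomputes the derived quantities from the quoted inputs only. It is a back-of-the-envelope check under stated assumptions: KBytes/GBytes are treated as decimal units, every micro-bunch is assumed to carry the full 120 KBytes, and the raw bits-per-hit values ignore any headers or packing the readout may add.

```python
# Requirement figures quoted above. KBytes/GBytes are treated as decimal
# units (10**3, 10**9 bytes); that is an assumption of this sketch.
MICROBUNCH_NS = 1695           # micro-bunch period
LIVE_GATE_NS = 1000            # nominal live gate per micro-bunch
BEAM_WINDOW_MS = 492           # beam delivered in the first 492 ms of a Supercycle
SPILL_MS = 54                  # length of one spill
SPILLS_PER_SUPERCYCLE = 8
SUPERCYCLE_S = 1.33
BYTES_PER_MICROBUNCH = 120e3   # zero-suppressed data per micro-bunch (assumed constant)
REDUCTION_FACTOR = 100         # minimum online filter rejection

# Beam structure: the eight spills must fit inside the beam window.
assert SPILLS_PER_SUPERCYCLE * SPILL_MS <= BEAM_WINDOW_MS
microbunches_per_spill = SPILL_MS * 1e6 / MICROBUNCH_NS                 # ~31,858
microbunches_per_supercycle = SPILLS_PER_SUPERCYCLE * microbunches_per_spill
live_fraction = LIVE_GATE_NS / MICROBUNCH_NS                            # ~0.59

# Rate while beam is present, then averaged over the Supercycle (the effect
# of buffering spill data in ROC memory), then after the online filters.
beam_on_rate = BYTES_PER_MICROBUNCH / (MICROBUNCH_NS * 1e-9)            # ~70.8e9 B/s
bytes_per_supercycle = BYTES_PER_MICROBUNCH * microbunches_per_supercycle
averaged_rate = bytes_per_supercycle / SUPERCYCLE_S                     # ~23.0e9 B/s
after_filter_rate = averaged_rate / REDUCTION_FACTOR                    # ~230e6 B/s

# Detector bookkeeping from the Detectors list.
tracker_straws = 96 * 12 * 20          # tubes/panel * panels/station * stations
tracker_rocs = 12 * 20                 # one ROC per panel
crv_sipms = 296 * 64                   # front-end boards * channels/board
total_rocs = tracker_rocs + 240 + 15   # tracker + calorimeter + CRV ROCs
tracker_hit_bits = 2 * 16 + 6 * 10     # two 16-bit TDCs + six 10-bit ADCs
calo_hit_bits = 25 * 12                # ~25 12-bit ADC samples

print(f"micro-bunches per spill:      {microbunches_per_spill:,.0f}")
print(f"micro-bunches per Supercycle: {microbunches_per_supercycle:,.0f}")
print(f"live-gate fraction:           {live_fraction:.2f}")
print(f"beam-on rate:                 {beam_on_rate / 1e9:.1f} GB/s")
print(f"Supercycle-averaged rate:     {averaged_rate / 1e9:.1f} GB/s")
print(f"after x{REDUCTION_FACTOR} filter:            {after_filter_rate / 1e6:.0f} MB/s")
print(f"tracker straws {tracker_straws}, CRV SiPMs {crv_sipms}, ROCs {total_rocs}")
print(f"raw bits per hit: tracker {tracker_hit_bits}, calorimeter {calo_hit_bits}")
```

Under these assumptions, buffering in the ROCs cuts the sustained rate to roughly a third of the ~70 GBytes/sec beam-on rate, and the factor-100 filter brings it to about 230 MBytes/sec. The 495 Readout Controllers counted from the Detectors list are consistent with the "~500 detector data sources" quoted in the requirements.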

Technical Design

The Mu2e DAQ is based on a “streaming” readout. This means that all detector data is digitized, zero-suppressed in front-end electronics, and then transmitted off the detector to the DAQ system. While this approach results in a higher off-detector data rate, it also provides greater flexibility in data analysis and filtering, as well as a simplified architecture.

The Mu2e DAQ architecture is further simplified by the integration of all off-detector components in a “DAQ Server” that functions as a centralized controller, data collector and data processor. A single DAQ Server can be used as a complete standalone data acquisition/processing system or multiple DAQ Servers can be connected together to form a highly scalable system.
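To make the streaming-readout and DAQ Server description more concrete, here is a minimal Python sketch of event building. Everything in it is hypothetical: the `Fragment` record, the `event_tag` field standing in for a micro-bunch timestamp, the `server_for` modulo routing rule and the `EventBuilder` class are illustrative assumptions, not the Mu2e DAQ software or its data format. It shows the core idea only: each ROC streams its zero-suppressed fragment for a micro-bunch, all fragments with the same tag are routed to the same DAQ Server, and that server assembles a complete event once every expected source has reported, at which point the filters could run on it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    """Hypothetical record: one ROC's zero-suppressed data for one micro-bunch."""
    roc_id: int
    event_tag: int   # hypothetical micro-bunch timestamp common to all ROCs
    payload: bytes


def server_for(event_tag: int, n_servers: int) -> int:
    """Hypothetical routing rule: all fragments of one micro-bunch go to one server."""
    return event_tag % n_servers


class EventBuilder:
    """Sketch of one DAQ Server collecting fragments into complete events."""

    def __init__(self, expected_rocs):
        self.expected = frozenset(expected_rocs)
        self.pending = defaultdict(dict)   # event_tag -> {roc_id: payload}

    def add(self, frag: Fragment):
        """Store a fragment; return {roc_id: payload} once the event is complete, else None."""
        if frag.roc_id not in self.expected:
            raise ValueError(f"unexpected ROC {frag.roc_id}")
        parts = self.pending[frag.event_tag]
        parts[frag.roc_id] = frag.payload
        if set(parts) == self.expected:
            return self.pending.pop(frag.event_tag)
        return None


# Usage: three ROCs stream fragments for four micro-bunches to two servers.
servers = [EventBuilder(expected_rocs=range(3)) for _ in range(2)]
built = []
for tag in range(4):
    for roc in range(3):
        frag = Fragment(roc_id=roc, event_tag=tag, payload=bytes([roc]))
        event = servers[server_for(tag, len(servers))].add(frag)
        if event is not None:
            built.append((tag, event))   # a filter decision would be made here

print([tag for tag, _ in built])
```

Routing on the tag alone is what lets DAQ Servers be added in this sketch without changing anything upstream; a real system would also need timeouts and handling for missing or late fragments, which are omitted here.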

To reduce development costs, the system design is based almost entirely on commercial hardware and, wherever possible, software from previous DAQ development and open source efforts.

DAQ / Trigger to-do list (in progress)

- DAQ - Timing Distribution (Greg Rakness):

- learn the interfaces to/from the Mu2e Timing distribution circuit board (MTC).

- learn how the MTC is used by the CFO, DTC, and Monitors.

- learn the interfaces to the detector ROC prototypes that are available for slice testing.

- learn how to connect and mockup different scenarios by connecting optical fibers and copper cables to the MTC, ROC, and measurement equipment.

- learn how to take measurements for the different scenarios and record results. The measurements include jitter, bit error rate, and reproducibility.

- infer the implications of the measurement results for reconstruction and for the physics of Mu2e.

- DAQ - Event Building (DAQ team):

- learn how to run the test suite of DAQ software to force data through the event building network.

- learn how to measure throughput using diagnostic software tools.

- learn how to measure bit error rate using diagnostic software tools.

- iterate on ideas to improve throughput and bit error rates, which may include firmware modifications, network switch configuration, cable length/type/brand permutations, or data protocol changes.

- DAQ - Graphical User Interfaces (GUIs):

- Make the experiment-specific web interfaces for controlling DAQ/slow controls/everything.

- Help design look and feel. Test security and bugs.

- Integrate slow control displays into web interface, design and implement slow control displays. This involves a lot of communication with detector subsystems.

- TRG - Track trigger (D. Brown, R. Bonventre, G. Pezzullo, B. Echenard):

- Re-implement Peak-Ped charge computation

- Reduce/eliminate FlagBkgHits MVA dynamic memory allocation

- Faster atan2f implementation

- Improve piecewise linear interpolation in StrawResponse (calibration application)

- Replace FlagBkgHits / TimeClusterFinder MVA (ANN) with Fisher, cuts, ...

- Improve circle fit stability / convergence / speed (median -> mean?)

- Re-implement POCA, Helix functions in single-precision in KalSeed fit

- Hit timing (TOT) improvement

- TRG - Track-calo trigger (G. Pezzullo + Yale group):

- Integrate with the track trigger sequence

- include positive tracks

- TRG - Calo / track-calo (S. di Falco):

- Use common fast clustering reconstruction

- Improve performance (ANN vs BDT, fast BDT implementation?)

- TRG - Calibration trigger:

- low-energy DIO

- Any other trigger?

- TRG - Off-spill triggers:

- develop cosmic triggers

- other off-spill triggers

- performance tests with off-spill sample

- TRG - Full trigger menu (G. Pezzullo):

- implement fcl chain

- define trigger payload
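For the "Faster atan2f implementation" item, one common approach is to reduce the argument to |z| ≤ 1 and replace `atan` with a low-order polynomial. The Python sketch below is only a prototype for checking accuracy before porting to C++; the function names and the coefficients 0.2447 and 0.0663 (from a widely used approximation of atan on [-1, 1]) are assumptions, not the track trigger's code. Its maximum error is about 1.5e-3 rad, so whether it is adequate depends on the angular precision the helix and POCA computations actually need.

```python
import math

# Coefficients of a commonly used approximation of atan on [-1, 1];
# assumed here, to be re-validated against the trigger's accuracy needs.
_A = 0.2447
_B = 0.0663


def fast_atan_unit(z: float) -> float:
    """Approximate atan(z) for |z| <= 1 (max error roughly 1.5e-3 rad)."""
    az = abs(z)
    return math.pi / 4 * z - z * (az - 1.0) * (_A + _B * az)


def fast_atan2(y: float, x: float) -> float:
    """Approximate math.atan2(y, x) by reducing the argument to |z| <= 1.

    Signed zeros are not distinguished the way math.atan2 does.
    """
    if x == 0.0 and y == 0.0:
        return 0.0
    if abs(x) >= abs(y):
        # |y/x| <= 1: atan directly, shifted by +/-pi in the left half-plane.
        angle = fast_atan_unit(y / x)
        if x < 0.0:
            angle += math.pi if y >= 0.0 else -math.pi
        return angle
    # |x/y| < 1: use atan2(y, x) = +/-pi/2 - atan(x/y).
    half_pi = math.pi / 2 if y > 0.0 else -math.pi / 2
    return half_pi - fast_atan_unit(x / y)


# Usage: compare against the library function around the full circle.
worst = max(
    abs(fast_atan2(math.sin(t), math.cos(t)) - math.atan2(math.sin(t), math.cos(t)))
    for t in (-math.pi + k * 2 * math.pi / 3600 for k in range(1, 3600))
)
print(f"max |error| = {worst:.2e} rad")
```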

[1] Tschirhart, R., “Trigger and DAQ Requirements,” Mu2e-doc-1150