POMS

Revision as of 19:51, 27 July 2022

Introduction

The Production Operations Management Service (POMS) is a computing division tool that helps users run large and complex grid campaigns. It provides:

- control scripts and GUI interface

- chaining of multiple stages of a grid campaign

- re-submission of failed jobs

- analysis of logs and database entries for results

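POMS has been successfully employed for the production of the MDC2020 datasets. The supported and recommended way to access POMS is through the web interface (https://pomsgpvm01.fnal.gov/poms/index/mu2e/production). project-py (https://cdcvs.fnal.gov/redmine/projects/project-py/wiki/Project-py_guide) is a Python script that provides a command-line interface.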

Tutorial

Work in progress

The POMS system is designed around the concept of a campaign. A campaign is a set of stages, which can depend on one another. Each stage corresponds to the submission of a certain number of jobs to the computing grid with a specific configuration. A stage typically takes an entire SAM dataset as input and produces one or more SAM datasets as output, which contain the output files produced by each job.

In this tutorial we will create a campaign with two stages, whose goal is to run the reconstruction stage on a pre-existing digi sample. This campaign can then be easily extended to run an arbitrary number of stages (e.g. generation, digitization, and reconstruction).

The first step is to create a proxy certificate that will then be used by POMS:

setup fife_utils

kx509 -n --minhours 168 -o /tmp/x509up_voms_mu2e_Analysis_${USER}

upload_file /tmp/x509up_voms_mu2e_Analysis_${USER}

A POMS campaign requires two files: an INI file, which defines the stage names and their interdependencies, and a CFG file, which describes the configuration for each stage.

INI file

Let's start with the INI file: it is created locally and then uploaded to POMS through the web interface. In the following INI file we will specify two stages: the first one creates the FCL files, one per job, and the second one takes as input the SAM dataset containing the FCL files and submits N jobs, where N is the number of FCL files in the dataset.

First of all, the INI file needs the definition of the campaign and of the job type we are going to run.

[campaign]

experiment = mu2e

poms_role = analysis

name = srsoleti_tutorial

campaign_stage_list = reco_fcl, reco

[campaign_defaults]

vo_role=Analysis

software_version=MDC2020t

dataset_or_split_data=None

cs_split_type=None

completion_type=complete

completion_pct=100

param_overrides="[]"

test_param_overrides="[]"

merge_overrides=False

login_setup=srsoleti_poms_login

job_type=mu2e_reco_srsoleti_jobtype

stage_type=regular

output_ancestor_depth=1

[login_setup srsoleti_poms_login]

host=pomsgpvm01.fnal.gov

account=poms_launcher

setup=setup fife_utils v3_5_0, poms_client, poms_jobsub_wrapper;
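Before uploading, it can be handy to check locally that the file parses and lists the stages you expect. The following is a minimal sketch using Python's standard configparser module on an abridged copy of the sections above. Treating campaign_stage_list as a comma-separated list is an assumption based on how the value is written here, not on POMS documentation.

```python
import configparser

# Abridged copy of the [campaign] and [campaign_defaults] sections above.
INI_TEXT = """
[campaign]
experiment = mu2e
poms_role = analysis
name = srsoleti_tutorial
campaign_stage_list = reco_fcl, reco

[campaign_defaults]
vo_role=Analysis
software_version=MDC2020t
login_setup=srsoleti_poms_login
job_type=mu2e_reco_srsoleti_jobtype
"""

# interpolation=None leaves any literal '%' in the values untouched.
parser = configparser.ConfigParser(interpolation=None)
parser.read_string(INI_TEXT)

# Assumption: stage names are separated by commas.
stages = [s.strip() for s in parser["campaign"]["campaign_stage_list"].split(",")]
defaults = dict(parser["campaign_defaults"])

print("stages:", stages)
print("default job type:", defaults["job_type"])
```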

Then, we define two job types, one that corresponds to the stage that will run the mu2e process, and one that corresponds to the stage that will run the generate_fcl script, which creates the FCL files.

[job_type mu2e_reco_srsoleti_jobtype]

launch_script = fife_launch

parameters = [["-c ", "/mu2e/app/users/srsoleti/tutorial/reco.cfg"]]

output_file_patterns = %.art

recoveries = [["proj_status",[["-Osubmit.dataset=","%(dataset)s"]]]]

[job_type generate_fcl_reco_srsoleti_jobtype]

launch_script = fife_launch

parameters = [["-c ", "/mu2e/app/users/srsoleti/tutorial/reco.cfg"]]

output_file_patterns = %.fcl
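The parameters and recoveries values are JSON-style lists of [option, value] pairs. The trailing space in "-c " and the trailing "=" in "-Osubmit.dataset=" suggest that each pair is concatenated as written to build the launch command. That reading is an inference from the values above, not a documented POMS rule, and the helper join_pairs below is purely illustrative.

```python
import json

launch_script = "fife_launch"
parameters = json.loads('[["-c ", "/mu2e/app/users/srsoleti/tutorial/reco.cfg"]]')
recoveries = json.loads('[["proj_status",[["-Osubmit.dataset=","%(dataset)s"]]]]')


def join_pairs(pairs):
    """Concatenate each [option, value] pair, then separate the pairs with spaces.

    Hypothetical helper: assumes pairs are glued together exactly as written.
    """
    return " ".join(option + value for option, value in pairs)


# Command line implied by launch_script and parameters.
command = f"{launch_script} {join_pairs(parameters)}"

# Each recovery entry is [recovery_type, list_of_pairs].
recovery_type, recovery_pairs = recoveries[0]
recovery_options = join_pairs(recovery_pairs)

print(command)
print(recovery_type, recovery_options)
```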

Finally, we define the two stages that form our campaign, reco and reco_fcl.

[campaign_stage reco_fcl]

param_overrides = [["--stage ", "reco_fcl"]]

test_param_overrides = [["--stage ", "reco_fcl"]]

job_type = generate_fcl_reco_srsoleti_jobtype

[campaign_stage reco]

param_overrides = [["--stage ", "reco"]]

test_param_overrides = [["--stage ", "reco"]]

[dependencies reco]

campaign_stage_1 = reco_fcl

file_pattern_1 = %.fcl
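The [dependencies ...] sections define which stage feeds which. As a rough illustration of how the stage order follows from them, the sketch below reads an abridged copy of the sections above and sorts the stages topologically. It assumes that every campaign_stage_N key in a dependencies section names an upstream stage. It needs Python 3.9 or later for graphlib.

```python
import configparser
from graphlib import TopologicalSorter

# Abridged copy of the stage and dependency sections above.
INI_TEXT = """
[campaign_stage reco_fcl]
job_type = generate_fcl_reco_srsoleti_jobtype

[campaign_stage reco]

[dependencies reco]
campaign_stage_1 = reco_fcl
file_pattern_1 = %.fcl
"""

# interpolation=None is needed because of the literal '%' in file_pattern_1.
parser = configparser.ConfigParser(interpolation=None)
parser.read_string(INI_TEXT)

# Map each stage to the set of stages it depends on.
graph = {}
for section in parser.sections():
    kind, _, name = section.partition(" ")
    if kind == "campaign_stage":
        graph.setdefault(name, set())
    elif kind == "dependencies":
        upstream = {
            value
            for key, value in parser[section].items()
            if key.startswith("campaign_stage_")
        }
        graph.setdefault(name, set()).update(upstream)

# Upstream stages come first in the resulting order.
order = list(TopologicalSorter(graph).static_order())
print(order)
```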

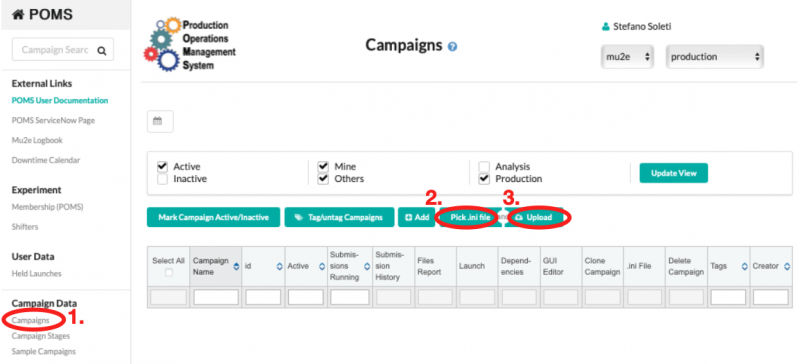

The file can then uploaded to POMS by connecting to POMS, clicking on "Campaigns", then "Pick .ini file", and "Upload", as shown in the picture below.

CFG file

The CFG file describes the configuration for each stage: it tells the grid node what to run and in which order. First, we start with a [global] section, where we define variables that will be used later in the file:

[global]

group = mu2e

subgroup = highpro

experiment = mu2e

wrapper = file:///${FIFE_UTILS_DIR}/libexec/fife_wrap

submitter = srsoleti

outdir_sim = /pnfs/mu2e/tape/usr-sim/sim/%(submitter)s/

outdir_dts = /pnfs/mu2e/tape/usr-sim/dts/%(submitter)s/

logdir_bck = /pnfs/mu2e/tape/usr-etc/bck/%(submitter)s/

outdir_mcs = /pnfs/mu2e/tape/usr-sim/mcs/%(submitter)s/

primary_name = CeEndpoint

stage_name = override_me

artRoot_dataset = override_me

histRoot_dataset = override_me

override_dataset = override_me

release = MDC2020

release_v_i = r

release_v_o = t

desc = %(release)s%(release_v_o)s

db_folder = mdc2020t

db_version = v1_0

db_purpose = perfect

beam = 1BB

As you can see, this is quite verbose. However, future CFG files can be made slimmer by using the includes statement in the [global] section, which imports existing CFG files.
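The %(name)s references in [global] use the same syntax as the interpolation in Python's standard configparser module. The sketch below expands a few of the values from the section above with configparser. Whether fife_launch resolves them through exactly this mechanism is an assumption. Note that plain configparser only resolves names within the same section (or DEFAULT), so the sketch is limited to [global].

```python
import configparser

# A subset of the [global] section above.
CFG_TEXT = """
[global]
submitter = srsoleti
outdir_sim = /pnfs/mu2e/tape/usr-sim/sim/%(submitter)s/
release = MDC2020
release_v_o = t
desc = %(release)s%(release_v_o)s
"""

# The default interpolation expands %(name)s from the same section.
parser = configparser.ConfigParser()
parser.read_string(CFG_TEXT)
global_section = parser["global"]

desc = global_section["desc"]
outdir_sim = global_section["outdir_sim"]

print(desc)
print(outdir_sim)
```

With these values, desc expands to MDC2020t, which matches the software_version used in the INI file.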

Now, we need to define the default job configuration. This can also be written in a separate CFG file and imported.

[submit]

debug = True

G = %(group)s

subgroup = %(subgroup)s

e = SAM_EXPERIMENT

e_1 = IFDH_DEBUG

e_2 = POMS4_CAMPAIGN_NAME

e_3 = POMS4_CAMPAIGN_STAGE_NAME

resource-provides = usage_model=DEDICATED,OPPORTUNISTIC

generate-email-summary = True

expected-lifetime = 23h

memory = 2500MB

email-to = %(submitter)s@fnal.gov

l = '+SingularityImage=\"/cvmfs/singularity.opensciencegrid.org/fermilab/fnal-wn-sl7:latest\"'

append_condor_requirements='(TARGET.HAS_SINGULARITY=?=true)'

f = dropbox:///mu2e/app/home/mu2epro/db_fcl_test/ucondb_auth.sh.tar.gz

[job_setup]

debug = True

find_setups = False

source_1 = /cvmfs/mu2e.opensciencegrid.org/setupmu2e-art.sh

source_2 = /cvmfs/mu2e.opensciencegrid.org/Musings/SimJob/%(release)s%(release_v_o)s/setup.sh

source_3 = $CONDOR_DIR_INPUT/ucondb_auth.sh

setup_1 = -B ifdh_art v2_14_06 -q e20:prof

setup_2 = -B mu2etools

setup_3 = -B sam_web_client

ifdh_art = True

postscript = find *[0-9]* -maxdepth 1 -name "*.fcl" -exec sed -i "s/MU2EGRIDDSOWNER/%(submitter)s/g" {} +

postscript_2 = find *[0-9]* -maxdepth 1 -name "*.fcl" -exec sed -i "s/MU2EGRIDDSCONF/%(desc)s/g" {} +

postscript_3 = find *[0-9]* -maxdepth 1 -name "*.fcl*" -exec mv -t . {} +

postscript_4 = [ -f template.fcl ] && rm template.fcl

[sam_consumer]

limit = 1

schema = xroot

appvers = %(release)s

appfamily = art

appname = SimJob

Now we have to define the kinds of output files (the "job outputs") created by our jobs. There are three: the .fcl files generated by the dedicated stage, the .tbz files containing the log of the mu2e process, and the .art files created by the mu2e process.

[job_output]

addoutput = cnf.*.fcl

add_to_dataset = cnf.%(submitter)s.%(stage_name)s.%(desc)s.fcl

declare_metadata = True

metadata_extractor = json

add_location = True

[job_output_1]

addoutput = *.tbz

declare_metadata = False

metadata_extractor = printJsonSave.sh

add_location = True

add_to_dataset = bck.%(submitter)s.%(stage_name)s.%(desc)s.tbz

hash = 2

hash_alg = sha256

[job_output_2]

addoutput = *.art

declare_metadata = True

metadata_extractor = printJsonSave.sh

add_location = True

hash = 2

hash_alg = sha256
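To see which section would pick up a given file, and what dataset name the add_to_dataset template produces, here is a small sketch. It assumes the addoutput values behave like shell-style glob patterns, and it uses illustrative values for stage_name and the file names, which do not come from an actual job.

```python
from fnmatch import fnmatch

# addoutput patterns from the three sections above.
outputs = {
    "job_output": "cnf.*.fcl",
    "job_output_1": "*.tbz",
    "job_output_2": "*.art",
}


def matching_section(filename):
    """Return the first section whose pattern matches, or None (hypothetical helper)."""
    for section, pattern in outputs.items():
        if fnmatch(filename, pattern):
            return section
    return None


# Illustrative values: stage_name is set per stage, desc is release + release_v_o.
variables = {"submitter": "srsoleti", "stage_name": "reco_fcl", "desc": "MDC2020t"}
dataset = "cnf.%(submitter)s.%(stage_name)s.%(desc)s.fcl" % variables

print(dataset)
print(matching_section("cnf.srsoleti.reco_fcl.MDC2020t.001201_00000001.fcl"))
print(matching_section("example.tbz"))
```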

UconDB

mu2e_ucon_prod - created 11/2021 to help with MDC2020

- database mu2e_ucon_prod owned by nologin role mu2e_ucon_prod;

- Kerberos-authenticated roles: brownd kutschke srsoleti

- md5 authenticated role 'mu2e_ucon_web' (for POMS)

- port is 5458 (on ifdbprod/ifdb08)

https://dbdata0vm.fnal.gov:9443/mu2e_ucondb_prod/app/... - not cached, external and internal access

http://dbdata0vm.fnal.gov:9090/mu2e_ucondb_prod/app/... - not cached, internal access only

https://dbdata0vm.fnal.gov:8444/mu2e_ucondb_prod/app/... - cached, external and internal access

http://dbdata0vm.fnal.gov:9091/mu2e_ucondb_prod/app/... - cached, internal access only

FCL files are saved to the UconDB in dedicated folders. It is not possible to use dots in the names of the database folders, so we replace them with underscores, as in:

https://dbdata0vm.fnal.gov:9443/mu2e_ucondb_prod/app/UI/folder?folder=cnf_mu2e_pot_db_test_v12_fcl

The POMS FCL stages take care of creating the folders and saving the FCL files. The SAM location of the files stored in the database looks like this:

$ samweb locate-file cnf.mu2e.POT.db_test_v12.001201_00000001.fcl

dbdata0vm.fnal.gov:/mu2e_ucondb_prod/app/data/cnf_mu2e_POT_db_test_v12_fcl
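The mapping from a SAM file name to its UconDB folder can be sketched as follows. The function assumes the six-field Mu2e file name layout (tier.owner.description.configuration.sequencer.format) and that the folder name is the file name without its sequencer, with dots replaced by underscores. This is inferred from the example above. ucondb_folder is a hypothetical helper, not part of any POMS or UconDB tool.

```python
def ucondb_folder(sam_filename):
    """Derive the UconDB folder name from a SAM file name (illustrative only)."""
    fields = sam_filename.split(".")
    if len(fields) != 6:
        raise ValueError(f"expected 6 dot-separated fields, got {len(fields)}")
    tier, owner, description, configuration, _sequencer, file_format = fields
    # Drop the sequencer and join the remaining fields with underscores.
    return "_".join([tier, owner, description, configuration, file_format])


folder = ucondb_folder("cnf.mu2e.POT.db_test_v12.001201_00000001.fcl")
print(folder)
```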

References

- POMS production top

- redmine

- DUNE video tutorial