Computing Concepts


Revision as of 15:17, 1 October 2023

Introduction

This page is intended for physicists who are just starting to work in the Mu2e computing environment. It explains a few jargon words in case this is your first exposure to computing in High-Energy Physics (HEP). The jargon includes both language that is common throughout HEP and language that is specific to Mu2e. It supports the ComputingTutorials.

Online and Offline

The Mu2e software is used in two different environments, online and offline. Online refers to activities in the Mu2e Hall, such as Data Acquisition (DAQ) and Triggering, collectively known as TDAQ. Offline refers to activities that take place after the data has reached the Computer Center, such as reconstruction, calibration and analysis. Some software is used in both places and some software is used in only one or the other.

This writeup will focus on offline computing but will have some references to online computing because some important ideas originate in the online world.

On-spill and Off-spill

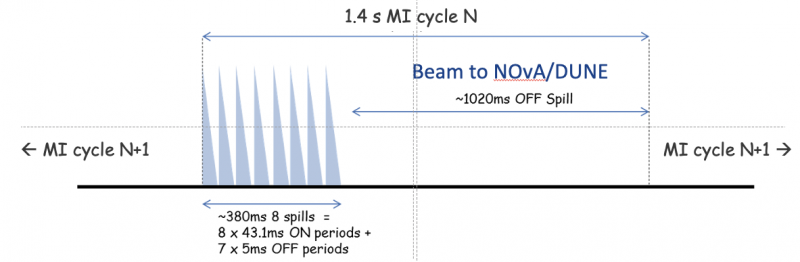

When Mu2e is in a steady state of data taking, there will be a repeating cycle that is 1.4 seconds long. The cycle is shown schematically in the figure below:

At the start of the cycle, the Fermilab accelerator complex will deliver a beam of protons to the Mu2e production target. The beam is structured as a series of pulses of nominally 39 million protons per pulse, separated by approximately 1695 ns; ideally there are no protons between the pulses. This continues for about 43.1 ms, or about 25,400 pulses. The period over which the 25,400 pulses arrive is called a spill. This will be followed by a brief period of about 5 ms when no beam is delivered to the production target. There are eight spills plus seven 5 ms inter-spill periods in the 1.4 s cycle. These are followed by a period of about 1.02 seconds during which no protons arrive at the target. A single pulse of nominally 39 million protons is correctly called a pulse; however it is sometimes called a bunch or a micro-bunch. For historical reasons those other terms are present throughout the Mu2e documentation and code.
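The numbers in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: it uses the nominal values quoted above, the variable names are invented for this example, and the protons-per-cycle figure is simply the product of those nominal values, not an official Mu2e number.

```python
# Nominal values quoted in the text; all are approximate.
PULSE_SPACING_NS = 1695       # time between consecutive proton pulses
PULSES_PER_SPILL = 25_400     # pulses in one spill
SPILLS_PER_CYCLE = 8          # spills in one 1.4 s MI cycle
INTER_SPILL_MS = 5.0          # gap between consecutive spills
CYCLE_S = 1.4                 # length of the full MI cycle
PROTONS_PER_PULSE = 39e6      # nominal protons in one pulse

# Length of one spill: number of pulses times the pulse spacing.
spill_ms = PULSES_PER_SPILL * PULSE_SPACING_NS * 1e-6

# Eight spills plus the seven inter-spill gaps between them.
beam_block_ms = SPILLS_PER_CYCLE * spill_ms + (SPILLS_PER_CYCLE - 1) * INTER_SPILL_MS

# What is left of the cycle is the long period with no beam to Mu2e.
off_spill_s = CYCLE_S - beam_block_ms * 1e-3

# Product of the nominal values (illustrative, not an official number).
protons_per_cycle = PROTONS_PER_PULSE * PULSES_PER_SPILL * SPILLS_PER_CYCLE

print(f"spill length          : {spill_ms:.1f} ms")
print(f"spills plus gaps      : {beam_block_ms:.1f} ms")
print(f"long off-spill period : {off_spill_s:.2f} s")
print(f"protons per MI cycle  : {protons_per_cycle:.2e}")
```

The computed spill length and long off-spill period agree with the rounded values of 43.1 ms and 1.02 s given in the text.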

This cycle of 1.4 s is an example of a Main Injector Cycle (MI cycle). The Main Injector is the name of one of the accelerators in the Fermilab complex. During the 1.020 s period with no beam to Mu2e, the Fermilab accelerator complex will deliver protons to the NOvA experiment (early in Mu2e) or the DUNE experiment (later in Mu2e). Towards the end of each 1.020 s period the accelerator complex will begin the preparatory steps to deliver the next spills of protons to Mu2e.

In normal operations this MI cycle will repeat without a break for about 1 minute. Then there will be a brief pause, perhaps 5 to 10 seconds, during which other sorts of MI cycles are executed. One example is delivering protons to the Fermilab test beam areas, called MTEST. This whole process is called a super-cycle.

When operations are stable Mu2e will run continuous repeats of this super-cycle. The explanation of why the MI-cycle and super-cycle are the way they are is outside of the scope of this writeup.

We define the term “on-spill” to mean during the 8 spills. We define the term “off-spill” to mean any time that the detector is taking data that is not on-spill. At this time Mu2e does not have widely agreed upon terms to differentiate several different notions of off-spill:

- During the 1.020 s off-spill period during an MI cycle

- During the seven 5 ms inter-spill periods within the MI cycle

- During the portion of the super-cycle when the MI is not running a protons-to-Mu2e MI cycle.

- During an extended period of time, minutes to months, when the accelerator complex is not delivering protons to the Production Target.

Some groups within Mu2e do use language to make some of these distinctions but it is neither uniform nor widely adopted.

During off-spill data taking the Mu2e detector does some or all of the following. Some of these can be done at the same time but some require a special configuration of the data taking system.

- Measure cosmic ray induced activity in the detector.

  - This is used to look for cosmic rays that produce signal-like particles and to measure the ability of the cosmic ray veto system to identify when this happens.

  - This is also a source of data that we will use to calibrate the detector in-situ.

- Randomly read out portions of the detector to collect samples that can be used to measure what a quiet detector looks like. This is referred to as “pedestal” data.

- Perform dedicated calibration operations.

Doing all three well is important to the success of Mu2e.

1BB and 2BB

The previous section describes how Mu2e will operate after it has been fully commissioned. When Mu2e starts commissioning with beam, we will run a different MI-cycle. In this cycle there will only be 4 spills, each of longer duration, and the total number of protons on target (POT) during this MI cycle will be about 55% of that of the MI cycle described above. The reason for this is to have additional radiation safety margin.

The MI cycle defined above is called 2BB while the modified cycle is called 1BB. The BB is an acronym for Booster Batch. The Booster is part of the Fermilab accelerator complex and it feeds the Main Injector. A batch is the unit of protons transferred from the Booster to the Main Injector. You can infer from the above discussion that one Booster Batch is able to produce four spills. You can read more about the delivery of the proton beam to Mu2e.

Events

The basic element of Mu2e data processing is an “event”, which is defined to be all of the data associated with a given time interval. For example when Mu2e is taking on-spill data, a proton pulse arrives at the production target approximately every 1695 ns. All of the raw data collected after the arrival of one proton pulse and before the arrival of the next proton pulse forms one event. When Mu2e processes its data, the unit of processing is one event at a time; each event is processed independently of the others.

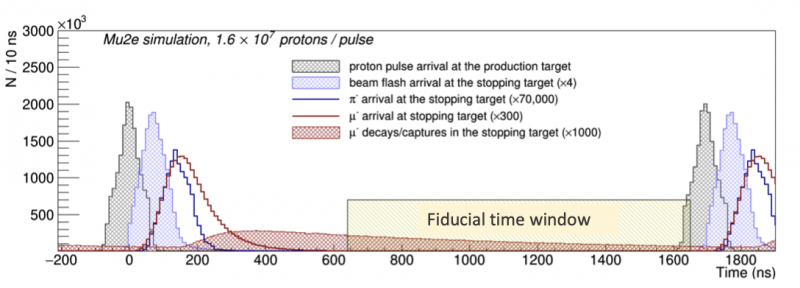

By convention Mu2e has chosen that each event will start at the middle of the proton pulse that defines the event start; that is, for each event the time t=0 is defined to be the middle of the proton pulse. This is illustrated by the figure below:

During off-spill cases, there is no external reference to define an event and Mu2e has made the following choice: off-spill events will have a duration of 100 μs and one will follow the other with no break between them. For off-spill events the time t=0 is simply the start of the event.
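The two event definitions above amount to dividing a running clock into fixed-length intervals. The following sketch shows that bookkeeping under simplifying assumptions: the pulse spacing is taken to be exactly 1695 ns, on-spill time is measured from the middle of the first proton pulse of a spill, and off-spill data taking is assumed to run continuously. The function names are invented for this illustration and are not part of any Mu2e software.

```python
ON_SPILL_EVENT_NS = 1695        # nominal spacing between proton pulses
OFF_SPILL_EVENT_NS = 100_000    # off-spill events last 100 microseconds

def on_spill_event(t_ns: int) -> tuple[int, int]:
    """Map a time since the middle of the first pulse of a spill to
    (event index within the spill, time t within that event)."""
    return divmod(t_ns, ON_SPILL_EVENT_NS)

def off_spill_event(t_ns: int) -> tuple[int, int]:
    """Map a time since the start of off-spill data taking to
    (event index, time t within that event)."""
    return divmod(t_ns, OFF_SPILL_EVENT_NS)

# One on-spill event per proton pulse: 8 spills of about 25,400 pulses.
ON_SPILL_EVENTS_PER_CYCLE = 8 * 25_400

# If data were taken for the whole 1.02 s off-spill period (an assumption),
# this is how many back-to-back 100 microsecond events would fit in it.
OFF_SPILL_EVENTS_IN_LONG_GAP = 1_020_000_000 // OFF_SPILL_EVENT_NS

print(on_spill_event(3400))
print(off_spill_event(250_000))
print(ON_SPILL_EVENTS_PER_CYCLE, OFF_SPILL_EVENTS_IN_LONG_GAP)
```

In both cases the second element of the returned pair plays the role of the time t within the event, with t=0 at the start of the interval.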

For on-spill events, some subsystems start recording data for an event at t=0 but other subsystems start recording data at a later time; in those subsystems the early-time data is dominated by backgrounds and is not of use for physics analysis. The figure above illustrates some of the timing within an event.

The horizontal axis shows time, in ns, and the vertical axis shows the number of particles that arrive in a 10 ns window. The left side black hatched histogram shows the time profile of one proton pulse arriving at the production target at t=0. This is followed 1695 ns later by the time profile of the next proton pulse. The time profile of the proton pulse is a consequence of details of the Fermilab accelerator complex that are beyond the scope of this note. The solid red histogram shows the time at which negative muons stop in the stopping target. The time profile of the muon stopping times is the convolution of the proton pulse shape with the travel time from the Production Target to the Stopping Target. The caption says that the muon stopping time distribution is scaled up by a factor of 300. Note that the black histogram shows time as measured at the production target while the red histogram shows time measured at the stopping target.

The hatched red area shows the time at which the muon in a muonic Al atom either decays or is captured by the nucleus. Its time profile is the convolution of the muon stopping time distribution with the exponential decay of the bound state muon. The caption says that this is scaled up another factor of about 3.3 relative to the muon stopping time distribution. If Mu2e conversion occurs at a measurable rate, the shape of the time profile of the signal will be the same as the red hatched area but the normalization will be very different. This figure is drawn as one pulse in the middle of a spill of near identical pulses. On the left hand side you can see that muonic atoms produced during earlier pulses will still be decaying when this pulse begins.

The hatched box shows the fiducial time window for signal candidates. Ideally this figure would also show the live window, or live gate, which is the time during which the subsystems record data, but such a version is not available at present. Most of the subdetectors start recording data about 100 to 150 ns before the fiducial time window.

The other histograms shown in the figure are important for the discussion of backgrounds and they are described at: https://mu2e-docdb.fnal.gov/cgi-bin/sso/ShowDocument?docid=39255. For a shorter discussion see BackgroundsPhysIntro.

art, EventIDs, Runs and Subruns

Mu2e uses an event processing framework named art to manage the processing of events. It is supported by Fermilab Computing and is used by many of the Intensity Frontier experiments. You will learn more about art later in this document and in the ComputingTutorials. The art convention for uniquely labeling each event is to give it a unique art::EventID, which is a set of 3 positive integers named the Run number, the SubRun number and the Event number.

The setting of the Run, SubRun and Event fields is under the control of the Mu2e Data Acquisition (DAQ) system. Mu2e has the following plan for how to use these features during normal data taking:

- A run will have a duration between 8 and 24 hours. A new run will start with the run number incremented by 1 and with the SubRun and Event numbers both reset to 1. This will introduce about a 5 minute pause in data taking to reload firmware and restart some software.

- A SubRun will have a duration of between 14 s and a few minutes. When a new SubRun starts, the SubRun number will be incremented by 1 and the Event number will be reset to 1. A SubRun transition will create no deadtime.

- Within a SubRun, the Event number is monotonically increasing in time.

The final choice for the duration of Runs and SubRuns will be made when we are closer to having data and we better understand the tradeoffs. When an anomaly occurs during data taking, we will sometimes decide to stop the run and start a new one in order to segregate the data that has the anomaly. So there will be some short runs.

The key feature of a SubRun is that it must be short enough to follow the fastest changing calibrations. In HEP the name used for calibration information is “Conditions Data”, which is stored in a Conditions Database and managed by a Conditions System. For example Mu2e will record the temperature at many places on the apparatus in order to apply temperature dependent calibrations during data processing. Other calibration information might be determined from data, such as the relative timing and alignment of tracker and calorimeter. Some conditions information will change quickly and some will be constant over long periods of time. Information within the Conditions system is labeled with an Interval of Validity (IOV). Mu2e has made the choice that IOVs will be a range of SubRuns. You will not encounter the Conditions system in the early tutorials.
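To make the idea of an IOV concrete, here is a minimal sketch of a lookup keyed on a range of SubRuns. It is not the interface of the Mu2e Conditions system: the `IOV` class, the `lookup` function and the calibration values are all hypothetical, and a SubRun is represented simply as a (run, subrun) pair.

```python
from dataclasses import dataclass
from typing import Optional

SubRunKey = tuple[int, int]   # (run number, subrun number)

@dataclass(frozen=True)
class IOV:
    """A hypothetical calibration value valid for an inclusive range of SubRuns."""
    first: SubRunKey
    last: SubRunKey
    value: float

    def contains(self, key: SubRunKey) -> bool:
        # Tuples compare element by element, so (run, subrun) pairs
        # are ordered by run first and by subrun second.
        return self.first <= key <= self.last

def lookup(iovs: list[IOV], run: int, subrun: int) -> Optional[float]:
    """Return the value whose IOV covers (run, subrun), or None if none does."""
    key = (run, subrun)
    for iov in iovs:
        if iov.contains(key):
            return iov.value
    return None

# Made-up example: one constant that changes partway through run 1000.
iovs = [
    IOV(first=(1000, 1), last=(1000, 40), value=1.02),
    IOV(first=(1000, 41), last=(1001, 12), value=1.05),
]

print(lookup(iovs, 1000, 7))
print(lookup(iovs, 1001, 5))
print(lookup(iovs, 1002, 1))
```

Because an IOV is a range of SubRuns, every event in a given SubRun sees the same conditions; this is why a SubRun must be short compared with the fastest changing calibration.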

When the Mu2e DAQ system starts a new Run it will record information about the configuration of the run and at the end of the run it will record statistics and status about the run. Similarly, when the Mu2e DAQ starts or ends a new SubRun it will record information about the configuration, statistics and status of the SubRun. Some of this information will be available in the offline world via databases; other information will be added to the computer file that holds the event information.

Analogous to the EventID, the art SubRunID is a 2-tuple of non-negative integers with parts named run number and subrun number. For completeness, art provides a RunID class that is just a non-negative integer.

The DAQ system will ensure that EventIDs, SubRunIDs and RunIDs are monotonically increasing in time.
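One way to picture this ordering is to treat each ID as a tuple that is compared field by field: run number first, then subrun number, then event number. The sketch below is a plain Python stand-in written for this page, not the art classes themselves, and the ID values are made up.

```python
from typing import NamedTuple

class EventID(NamedTuple):
    """Stand-in for an event label: (run, subrun, event)."""
    run: int
    subrun: int
    event: int

    def subrun_id(self) -> tuple[int, int]:
        """The (run, subrun) pair that this event belongs to."""
        return (self.run, self.subrun)

# Made-up IDs, deliberately listed out of time order.
ids = [
    EventID(1201, 2, 1),
    EventID(1200, 17, 5321),
    EventID(1201, 1, 9),
    EventID(1200, 17, 5320),
]

# Named tuples sort field by field, which reproduces the time order
# described above: by run, then by subrun, then by event.
ordered = sorted(ids)

for eid in ordered:
    print(eid.run, eid.subrun, eid.event)
```

Note that the event number alone does not order events in time, because it is reset to 1 at the start of every SubRun; the full triplet is needed.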

Sim and Reco

Since we do not have data yet, we analyze simulation or sim events which are based on our expectation of what data will look like. We draw randomly from expected and potential physics processes and then trace the particles through the detector, and write out events. The interaction of particles in the detector materials is simulated with the Geant4 software package. The simulated data looks like the real data will, except it also contains the truth of what happened in the interactions.

The output of simulation would typically be data events in the raw formats that we will see produced by the detector readout. These are typically ADC values indicating energy deposited, or TDC values indicating the time of an energy deposit. In the reconstruction or reco process, we run this raw data through modules that analyze the raw data and look for patterns that can be identified as evidence of particular particles. For example, hits in the tracker are reconstructed into individual particle paths, and energy in the calorimeter crystals is clustered into showers caused by individual electrons. Exactly how a reconstruction module does its work is called its algorithm. A lot of the work of physicists is invested in these algorithms, because they are fundamental to the quality of the experimental results.

Coding

The Mu2e simulation and reconstruction code is written in C++. We write modules which create simulated data, or read data out of the event, process it, and write the results back into the event. The modules plug into a framework called art, and this framework calls the modules to do the actual work, as the framework reads an input file and writes an output file. The primary data format is determined by the framework, so it is called the art format and the file will have an extension .art.

We use the git code management system to store and version our code. Currently, we have one main git repository which contains all our simulation and reconstruction code. You can check out this repository, or a piece of it, and build it locally. In this local area you can make changes to code and read, write and analyze small amounts of data. We build the code with a make system called scons. The code may be built optimized (prof) or non-optimized and prepared for running a debugger (debug).

At certain times, the code is tagged, built, and published as a stable release. These releases are available on the /cvmfs disk area. cvmfs is a sophisticated distributed disk system with layers of servers and caches, but to us it just looks like a read-only local disk, which can be mounted almost anywhere. We often run large projects using these tagged releases. cvmfs is mounted on the interactive nodes, at remote institutions, on some desktops, and on all the many grid nodes we use.

You can read more about accessing and building environment, git, scons and cvmfs.

Executables

Which modules are run and how they are configured is determined by a control file, written in fcl (pronounced fickle). This control file can change the random seeds for the simulation and the input and output file names, for example. A typical run might be to create a new simulation file. For various reasons, we often do our simulation in several stages, writing out a file between each run of the executable, or stage, and reading it in to start the next stage. A second type of job might be to run one of the simulation stages with a variation of the detector design, for example. Another typical run might be to take a simulation file as input and test various reconstruction algorithms, and write out reconstruction results.

Data products

The data in an event in a file is organized into data products. Examples of data products include straw tracker hits, tracks, or clusters in the calorimeter. The fcl is often used to decide which data products to read, which ones to make, and which ones to write out. There are data products which contain the information of what happened during the simulation, such as the main particle list, SimParticles.

UPS Products

Disambiguation of "products" - please note that we have both data products and UPS products which unfortunately are both referred to as "products" at times. Please be aware of the difference, which you can usually determine from the context.

The art framework and fcl control language are provided as a product inside the UPS software release management system. There are several other important UPS products we use. This software is distributed as UPS products because many experiments at the lab use these utilities. You can control which UPS products are available to you with a setup command such as "setup root v6_06_08", but most of this is organized as defaults inside of a setup script.

You can read more about how UPS works.

Histogramming

Once you have an art file, how do you actually make plots and histograms of the data? There are many ways to do this, so it is important to consult with the people you work with, and make sure you are working in a style that is consistent with their expertise and preferences, so you can work together effectively.

In any case, we always use the root UPS product for making and viewing histograms. There are two main ways to approach it. The first is to insert the histogram code into a module and write out a file which contains the histograms. The second method is to use a module to write out an ntuple, also called a tree. This is a summary of the data in each event, so instead of writing out the whole track data product, you might just write out the momentum and the number of hits in the ntuple. The ntuple is very compact, so you can easily open it and make histograms interactively very quickly.
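The ntuple idea can be illustrated without root. In the sketch below the "ntuple" is just a list of rows holding two summary quantities per track, and a histogram is filled from it after a simple selection. This is a plain Python stand-in for illustration only; in practice the ntuple would be a root tree, and the column names, values and the cut used here are all invented.

```python
# Hypothetical ntuple: one row per track with two summary quantities
# (momentum in MeV/c and number of hits); the values are made up.
ntuple = [
    {"momentum": 104.2, "nhits": 38},
    {"momentum": 103.7, "nhits": 41},
    {"momentum": 95.1, "nhits": 22},
    {"momentum": 104.9, "nhits": 35},
]

def histogram(values, lo, hi, nbins):
    """Count values into nbins equal-width bins covering [lo, hi).
    Values outside the range are ignored."""
    width = (hi - lo) / nbins
    counts = [0] * nbins
    for v in values:
        if lo <= v < hi:
            counts[int((v - lo) / width)] += 1
    return counts

# Select tracks with at least 30 hits, then histogram their momentum
# in six 1 MeV/c bins between 100 and 106 MeV/c.
selected = (row["momentum"] for row in ntuple if row["nhits"] >= 30)
counts = histogram(selected, lo=100.0, hi=106.0, nbins=6)

print(counts)
```

Because each row carries only a few numbers, changing the selection or the binning and refilling the histogram is fast, which is the main attraction of the ntuple approach.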

Read more about ways to histogram or ntuple data for analysis.

Workflows

Designing larger jobs

After understanding data on a small level by running interactive jobs, you may want to run on larger datasets. If a job is working interactively, it is not too hard to take that workflow and adapt it for running on large datasets on the compute farms. First, you will need to understand the mu2egrid UPS product which is a set of scripts to help you submit jobs and organize the output. mu2egrid will call the jobsub UPS product to start your job on the farm. Your data will be copied back using the ifdh UPS product, which is a wrapper to data transfer software. The output will go to dCache, which is a high-capacity and high-throughput distributed disk system. We have 100's of terabytes of disk space here, divided into three types (a scratch area, a persistent disk area, and a tape-backed area). Once the data is written, there are procedures to check it and optionally concatenate the files and write them to tape. We track our files in a database that is part of the SAM UPS product. You can see the files in dCache by looking under the /pnfs filesystem. Writing and reading files to dCache can have consequences, so please understand how to use dCache and also consult with an experienced user before running a job that uses this disk space.

Grid resources

Mu2e has access to a compute farm at Fermilab, called Fermigrid. This farm has several thousand nodes and Mu2e is allocated a portion of the nodes (our dedicated nodes). Once you have used the interactive machines to build and test your code, you can submit a large job to the compute farms. You can typically get 1000 nodes for a day before your priority goes down and you get fewer. If the farm is not crowded, which is not uncommon, you can get several times that by running on the idle or opportunistic nodes.

Mu2e also has access to compute farms at other institutions through a collaboration called Open Science Grid (OSG). It is easy to modify your submit command to use these resources. We do not have a quota here and can only access opportunistic nodes, so we don't really know how many nodes we can get, but it is usually at least as many as we can get on Fermigrid. This system is less reliable than Fermigrid so we often see unusual failure modes or jobs restarting.

Your workflow

Hopefully you now have a good idea of the concepts and terminology of the Mu2e offline. What part of the offline system you will need to be familiar with will depend on what tasks you will be doing. Let's identify four nominal roles. In all cases, you will need to understand the accounts and authentication.

- ntuple user. This is the simplest case. You probably will be given an ntuple, or a simple recipe to make an ntuple, then you will want to analyze the contents. You will need to have a good understanding of C++ and root, but not much else.

- art user. In this level you would be running art executables, so you will also need to understand modules, fcl, and data products. Probably also how to make histograms or ntuples from the art file.

- farm user. In this level you would be running art executables on the compute farms, so you will also need to understand the farms, workflows, dCache, and possibly uploading files to tape.

- developer. In this case, you will be writing modules and algorithms, so you need to understand the art framework, data products, geometry, C++, and standards in some detail, as well as the detector itself.