User:Sophie/Sim Gen Pileup

Channel Description

Pile-up refers to beam-related “backgrounds” that can produce extraneous hits in our detectors. These hits can cause dead time and affect reconstruction if not properly understood. All our pile-up models are currently derived from Monte Carlo, with some preliminary knowledge of the incoming beam composition and structure. All current simulations assume a tungsten production target and 8 GeV protons. As we begin commissioning we will re-model our pile-up using data-driven measurements.

Simulating the Mu2e Beam

The following description is for the MDC2020-style campaign. As our workflows evolve this should be updated.

Literature

Most of the references are internal:

- Werkema proton pulse = Mu2e Document 32620-v2

- Genser range = Mu2e Document 36977-v1

- Edmonds muon pileup = Mu2e Document 35561-v1

- ALCAP = https://arxiv.org/abs/2110.10228

Muon Beam

In the beam campaign, we start by simulating protons hitting the production target (POT). Currently the protons are modeled as a monochromatic beam directed at the center of the target face, with no angular divergence and a Gaussian transverse spread whose sigma is set by accelerator studies (see [Werkema proton pulse] for details). In this stage, Geant4 is configured with a relatively long minrange cut to speed up the simulation. Dedicated studies ([Genser range]) showed that this coarser cut has negligible impact on the accuracy of the final particles that interact with the detector. In addition, the magnetic field map used in this stage includes only the DS and TS, to reduce the memory footprint. The Geant4 simulation is stopped when the particles exit the TS, using an amalgamation of stopping volumes, so this truncated field map has no impact on the results.

Several configurations of the beam stage were provided. The primary configuration was used for simulations of the main Mu2e detectors: tracker, calorimeter, and CRV. In this configuration, only particles (SimParticles) exiting the Transport Solenoid (TS) were saved, with their position and kinematics at their point of exit recorded as a virtual detector StepPointMC, together with all the precursor particles involved in generating these particles, going back to the primary proton. Based on the goal of simulating the estimated statistics of the Run1 dataset, and on the downstream resampling factors, a total of 10^8 POTs were simulated in the main MDC2020 beam campaign, run in SimJob Musing MDC2020p.

Another beam configuration was used for studying particle transport in the TS, where the full Monte Carlo trajectories of all particles were saved. This configuration was used primarily for making event displays, and no large production sample was produced.

Another configuration saved particles entering the extinction monitor, or the extinction monitor region. This configuration was tested to be functional, but no ExMon data sets were produced in MDC2020, as the extinction monitor detector details were not considered to be accurate enough at the time productions were run.

The secondary simulation particles produced by the standard beam campaign are filtered and sorted into two output collections: beam and neutrals. Charged particles that reach the entrance of the DS are stored in the ‘beam’ collection. Neutral particles that enter the DS, or any kind of particle that exits through the TS sides (almost all of which are neutral), are stored in the ‘neutrals’ collection. During concatenation the ‘beam’ collection is further split into muons (positive and negative) and other charged particles (almost exclusively electrons). The muon collections are used (eventually) for modeling signal processes and muon-induced pileup. The electrons are used to model beam flash backgrounds. The neutrals collection is used to model pileup in the CRV and other detectors.
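The sorting rules in this paragraph can be sketched as a small classifier. This is only an illustration: the `Particle` class, the `exit_point` labels, and the function names are hypothetical and are not taken from the Mu2e Offline code; only the PDG codes are standard.

```python
from dataclasses import dataclass

MUON_PDG = 13  # standard PDG code for mu-; mu+ is -13


@dataclass
class Particle:
    pdg_id: int      # PDG particle code
    charge: int      # electric charge in units of e
    exit_point: str  # hypothetical label: "DS_entrance" or "TS_side"


def beam_stage_collection(p):
    """Return 'beam', 'neutrals', or None if the particle is not saved."""
    if p.exit_point == "TS_side":
        return "neutrals"  # any kind of particle leaving through the TS sides
    if p.exit_point == "DS_entrance":
        return "beam" if p.charge != 0 else "neutrals"
    return None


def concatenation_stream(p):
    """Split a 'beam' particle into muon and other-charged streams."""
    if abs(p.pdg_id) == MUON_PDG:
        return "mu_minus" if p.pdg_id == MUON_PDG else "mu_plus"
    return "other_charged"  # almost exclusively electrons
```

For example, a neutron (PDG 2112) reaching the DS entrance lands in ‘neutrals’, while a mu- (PDG 13) at the same place lands in ‘beam’ and then in the negative muon stream.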

Pions

The pion beam campaign is separate from the main beam campaign and is used for pion physics studies only, e.g. RPC background simulations. In order to acquire a large amount of statistics at the stopping target the pion lifetime is set as infinite. The proper time is then saved as an event weight and applied at analysis level. The pion beam campaign is similar to the muon campaign. It involves a protons-on-target stage, a resampling stage, a charge selector stage and a stops stage. The pion stops can then be fed into the RPC (internal and/or external) generator module in a similar way as the muons are used for the stopped muon processes. The digitization and reconstruction of the electrons/positrons should be the same as for conversion electrons. For a mixed sample the standard frames (i.e. those for the muon campaign) are used.
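As an illustration of how such a weight might be applied at analysis level, the sketch below assumes the weight is the pion survival probability exp(-t/τ) evaluated at the saved proper time t. The function names are hypothetical; the lifetime is the standard charged-pion value.

```python
import math

PION_LIFETIME_NS = 26.033  # charged-pion mean proper lifetime


def pion_survival_weight(proper_time_ns):
    """Probability that a real pion would have survived this proper time."""
    return math.exp(-proper_time_ns / PION_LIFETIME_NS)


def weighted_yield(proper_times_ns):
    """Sum of weights: the effective number of surviving pions."""
    return sum(pion_survival_weight(t) for t in proper_times_ns)
```

With this convention, a simulated pion that needed one lifetime of proper time to reach the stopping target counts as about 0.37 of an event.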

Pile Up

Pile up refers to the particles produced by particles unrelated to the signal (primary) particle of interest to physics analysis, such as a conversion electron. Pile up is an inevitable consequence of the large flux of muons we must produce to be sensitive to very small physics signals. Pile up particles create backgrounds in the detector which can affect detector readout, triggering, and reconstruction, and so must be simulated as part of the event to obtain accurate results. Pile up is an irreducible source of detector background, because it is produced by the same beam particles as the signals we are searching for. Note that both the signal particle production probability and the pileup production rate are proportional to the number of primary protons produced in a single event, a correlation which must be accounted for when analyzing simulated data.

The pileup campaign produces three direct streams of pileup (electron beam, muon beam, and neutrals) and indirect, stops-based pileup (from muon target and IPA stops). These four streams are combined to produce the complete pileup model during mixing. Due to the streamlining and simplification of the preceding stages, this represents a large decrease in computational burden and complexity compared to MDC2018, where a complete pileup model required 14 separate input streams.

To produce the direct pileup, the particles recorded in the beam campaign are resampled, and the Geant4 simulation of those particles is resumed from the point at which they were stopped. For the muon and electron beam samples, this is at the entrance to the DS. For the neutrals, it is wherever they exited the beamline. Note that there is a small discrepancy between the point at which the neutrals resampling begins (at the last recorded G4 step point) and where the input stream filtering was applied (at the exit of the relevant volume). The impact of this discrepancy was tested and found to be small.

The resampled pileup particles are allowed to interact with the detector, and the resultant G4 energy deposition in the detectors is summarized in the form of DetectorStep objects, described below. These DetectorSteps are used as secondary stream inputs in the mixing campaigns described below.

The Pile up Campaign consists of three stages: resampling, stopping and selection. The stopping stage builds separate collections of particles which reach two places: the muon stopping target (target) and the inner proton absorber (IPA). These stops can be taken as input into any of the primary physics simulations. The stops selector stage splits the muons (or pions) into two streams by their charge. The mu-minus target stops are then fed to a pileup stage (MustopPileup.fcl). To simulate a mixed sample for a given physics process, the output of the pileup stage is fed into the mixing stage (see below), along with the output of a primary campaign and the outputs of the muon beam flash, electron beam flash, and neutral flash stages.

In earlier campaigns (CD3, MDC2018, SU2020), each possible daughter product of a target-stopped muon decay or capture (DIO electron, photon, neutron, proton, …) was modeled as an independent physics process, by running a dedicated job with a dedicated output stream and separate filters. This resulted in a lot of output collections that had to be collated, a lot of book-keeping to make sure these separate samples were each mixed with the correct factor to correspond to the same number of stopped muons, a lot of configuration for the mixing stage, and a lot of IO (the MDC2018 mixing phase read 5 separate mustop pileup streams). The advantage of simulating separate daughter streams was the flexibility to modify a single daughter production distribution or rate during mixing, without having to rerun the entire pileup campaign.

Rates for stopped-muon daughter products are now relatively well-measured (see [ALCAP] for example). Mu2e never made use of the ability to rescale or remake pileup samples in MDC2018. Consequently, MDC2020 used a new model for generating pileup background from the decay and capture of target-stopped muons, in which each muon produces a combined set of daughter products and the job writes a single output stream. This greatly reduces the complexity of the pileup and mixing campaigns, the bookkeeping, and the IO. Currently, each pileup daughter process is modelled independently, as there is no data on correlations. Details of the stopped-muon pileup generator can be found in [Edmonds muon pileup].

In earlier simulation campaigns, pile up samples were generated without explicitly sampling the proton bunch time profile or the muon lifetime, instead assuming a nominal time. That allowed additional randomisation to be applied in the mixing step, as the same detector information could be shifted according to different samplings of those distributions. It also allowed applying different time profiles in downstream stages. Since then, understanding of the expected shape of the proton bunch time profile has improved significantly (see [Werkema proton pulse]), and it was decided that this additional degree of flexibility was not needed. Also, post-analysis of the MDC2018 data showed that the additional randomization provided by resampling the proton bunch profile was insignificant compared to the intrinsic randomization of resampling itself. The randomisation of the muon lifetime was also found to be unimportant, as there are plenty of statistics for that stream, with large randomisation through the decay and capture process daughter production.

Consequently, MDC2020 pile up samples were produced with fixed samplings of the proton bunch time profile and the muon lifetime. This simplified both the production workflow, and the downstream (analysis) workflows, as the MC true times no longer need to be adjusted for the specific proton bunch time or muon decay time.

The MDC2020 pile up sample production targets were chosen to allow production of a sample with the full Run1 statistics of mixed events with acceptable oversampling, within the practical limits of our available computing resources. Oversampling in MDC2018 pileup, particularly of neutrals, resulted in large statistical artifacts when evaluating CRV pileup background rates.

Given the rarity with which particles from the neutrals and electron beam streams produce signals in Mu2e detectors, these streams required the most statistics to produce unbiased samples, and consequently dominated the computing resources needed for MDC2020. Due to the need to resample sequentially, the potential for statistical artefacts was increased, especially for processes that involve the random combination of detector signals generated by separate particles (random coincidences). This is especially apparent in the elebeam and neutrals pile up streams.

Several tricks are used to mitigate the static correlations resulting from sequential sampling of the mixing streams. First, the mixin outputs were split into many collections, with each mixing job reading ~10 files from each. The generate_fcl script then picks a random subset and file order for each mixing job, increasing the randomness. When reading the pileup mixin streams, the first event read is chosen randomly from within the average range of the number of events per file, separately for each stream. These tricks randomize the mixing both from job to job and from stream to stream within each job, with essentially zero computational overhead on either the mixin production job or the mixing job. Picking a random first event from the first stream file requires knowing the (average) number of events per file. That information is extracted from the SAM database at the time the scripts used to perform the mixing are generated; see gen_Mix below for details.
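The per-job randomisation described above can be sketched as follows. This is not the generate_fcl implementation; the function name, file names, and event counts are hypothetical, and the average number of events per file is passed in directly rather than queried from SAM.

```python
import random


def plan_mixin_input(files, n_files, avg_events_per_file, rng):
    """Pick a random subset and order of files, and a random first event."""
    chosen = rng.sample(files, n_files)  # random subset, in random order
    first_event = rng.randrange(avg_events_per_file)  # events to skip at start
    return chosen, first_event


rng = random.Random(2020)  # one generator per mixing job

# Hypothetical catalogue: stream name -> (file list, average events per file)
catalogue = {
    "elebeam": ([f"elebeam_{i:03d}.art" for i in range(50)], 4000),
    "neutrals": ([f"neutrals_{i:03d}.art" for i in range(80)], 2500),
}

# Each stream gets its own file subset and its own starting event.
plan = {
    name: plan_mixin_input(files, 10, avg, rng)
    for name, (files, avg) in catalogue.items()
}
```

Because the starting event is drawn independently per stream, two streams read by the same job do not stay aligned event by event, which is the stream-to-stream randomisation the text refers to.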

In the most statistically-limited pileup streams (neutral and elebeam), a further trick is applied to increase the randomness during the concatenation stage. Once the Geant4 processing is complete, the concatenation is run multiple (10) times on the output, randomizing the (same) set of inputs each time. This creates a static input stream with additional randomness on a smaller scale than a single output file. Note that multiple concatenation of a pileup stream causes no overcounting, since all of those events are read many times during the mixing resampling campaign anyway. While multiple concatenation does improve the randomisation and reduce statistical artefacts coming from multi-particle random coincidences, it does nothing to improve the statistical oversampling due to resampling coincidences generated by the same particle.
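A minimal sketch of the multiple-concatenation idea, assuming that "randomizing the inputs" means reordering them on each pass. The function name and the treatment of inputs as a flat list are hypothetical simplifications.

```python
import random


def multi_concatenate(inputs, n_passes=10, seed=0):
    """Concatenate the same inputs n_passes times, reshuffled on each pass."""
    rng = random.Random(seed)
    stream = []
    for _ in range(n_passes):
        shuffled = list(inputs)  # copy, so the caller's list is untouched
        rng.shuffle(shuffled)
        stream.extend(shuffled)
    return stream
```

Every input appears exactly `n_passes` times in the result, but its neighbours differ from pass to pass, so coincidences between different particles are less likely to repeat.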

The high-statistics pile up samples intended for use as inputs for simulating mixed datasets using normal (1BB and 2BB) beam intensities apply a time cut at the detector step level, set to 250 ns past the proton bunch arrival time. This cut reduces the payload of some streams by a large factor, around 100 for the electron beam, and ~10 for the neutrals. It has no effect on the stopped muon pileup stream, as those detector steps include the muon lifetime. This cut has no effect on the mixing simulation output, due to the digitisation time cut of 450 ns applied in those jobs.
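The claim that the 250 ns cut is invisible after digitisation can be illustrated with a toy model. It assumes that both cuts keep steps at or after the threshold, that times are measured from the proton bunch arrival, and that no time offsets are added between the step and digitisation stages; the function name and the example times are hypothetical.

```python
STEP_TIME_CUT_NS = 250.0  # cut applied when the pileup samples are written
DIGI_TIME_CUT_NS = 450.0  # cut applied in the mixing (digitisation) jobs


def apply_time_cut(step_times_ns, cut_ns):
    """Keep only steps at or after cut_ns."""
    return [t for t in step_times_ns if t >= cut_ns]


step_times_ns = [12.0, 180.0, 249.9, 250.0, 430.0, 451.0, 900.0, 1650.0]

stored = apply_time_cut(step_times_ns, STEP_TIME_CUT_NS)
digis_from_stored = apply_time_cut(stored, DIGI_TIME_CUT_NS)
digis_from_all = apply_time_cut(step_times_ns, DIGI_TIME_CUT_NS)
```

Here `digis_from_stored` and `digis_from_all` are identical, because every step removed by the earlier cut would also have been removed by the later one.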

Workflow

The beam and pile up campaigns are currently part of Mu2e/Production. The full beam campaign is CPU intensive and is run only occasionally (once for a specific beam/target configuration).

In general, it is advised that if you require a beam/pile-up campaign you should use the existing MDC2020p sample. If you require a new campaign (because something has changed in the geometry of the target for example) you should talk with the Production Manager to configure the campaign and run it.

Campaigns

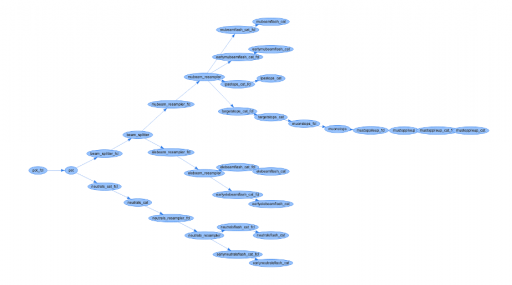

The figure shows the POMS campaign associated with the beam/pile-up streams. It begins with protons on target (POT.fcl).


The output of this is split into two streams: neutrals and charged particles. The neutrals stream is stored for later mixing.

The charged beam sample is further split by BeamSplitter.fcl. This produces electron and muon beam samples of both charges. The muon beam is further split and resampled. Muons which stop in the target or IPA are separated, and the remaining muons become part of the muon beam stream.

The target stop stream is processed further to produce a muon pile up stream, which consists of DIO and capture backgrounds.

Mixing

While the stopped muons are taken as input to produce muon primaries (DIO, conversions, RMC if we are using target stops), the remaining streams are not utilised until the "mixing" stage.

The mixing job can be configured using the gen_Mix.sh script:

gen_Mix.sh --primary CeEndpoint --campaign MDC2020 --pver ah --mver p --over ah --pbeam Mix1BB --dbpurpose perfect --dbversion v1_3

This tells the script that the user wants to make a set of CeEndpoint events, using existing detector step files labelled MDC2020ah. The user is requesting that the script use the stops campaign labelled MDC2020p (currently the most up to date). The "pbeam" argument tells the script to assume a 1BB (one booster batch) beam. The script then uses Production/JobConfig/mixing/OneBB.fcl; if set to 2BB it would use the corresponding TwoBB.fcl. OneBB.fcl contains the following:

physics.producers.PBISim.SDF: 0.6
physics.producers.PBISim.extendedMean: 1.58e7 # mean of the uncut distribution
physics.producers.PBISim.cutMax: 9.48e7 # truncate the tail at 6 times the mean

TwoBB.fcl contains instead:

physics.producers.PBISim.SDF: 0.6
physics.producers.PBISim.extendedMean: 3.93e7 # mean of the uncut distribution
physics.producers.PBISim.cutMax: 2.36e8 # truncate the tail at 6 times the mean
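To give a feel for what these three parameters control, the sketch below samples a truncated log-normal intensity. It rests on assumptions that are not confirmed by this page: that the intensity model is log-normal, that SDF is the ratio ⟨I⟩²/⟨I²⟩ (which for a log-normal gives σ² = -ln(SDF)), and that the tail is truncated by rejecting samples above cutMax. The function and dictionary names are hypothetical.

```python
import math
import random

# Values copied from the OneBB.fcl and TwoBB.fcl fragments
ONE_BB = {"sdf": 0.6, "extended_mean": 1.58e7, "cut_max": 9.48e7}
TWO_BB = {"sdf": 0.6, "extended_mean": 3.93e7, "cut_max": 2.36e8}


def sample_intensity(sdf, extended_mean, cut_max, rng):
    """Draw one proton bunch intensity from a truncated log-normal.

    Assumption: sdf = <I>^2 / <I^2>, so sigma^2 = -ln(sdf), and mu is
    chosen so that the uncut distribution has mean extended_mean.
    """
    sigma = math.sqrt(-math.log(sdf))
    mu = math.log(extended_mean) - 0.5 * sigma ** 2
    while True:
        intensity = rng.lognormvariate(mu, sigma)
        if intensity <= cut_max:  # assumed truncation by rejection
            return intensity


rng = random.Random(1)
one_bb_samples = [sample_intensity(rng=rng, **ONE_BB) for _ in range(20000)]
```

Under these assumptions the mean of the cut distribution sits slightly below `extended_mean`, since the rejected tail carries a small fraction of the total intensity.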

The mixing job will run the MixBackgroundFrames module for each input stream: Offline/EventMixing/src/MixBackgroundFrames_module.cc.

This module produces "background frames" that mimic Mu2e microbunch-events from single-particle simulation inputs. The number of particles to mix is determined from the input ProtonBunchIntensity object, which models beam intensity fluctuations. On top of the beam intensity fluctuations there is a random Poisson process, which represents the probability that a secondary from a given proton creates a hit in a collection to be mixed. This Poisson distribution is sampled by the module.
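The two-level randomness can be sketched as a Poisson draw whose mean scales with the proton count of the microbunch. This is not the MixBackgroundFrames code: the function names and the `mean_per_proton` parameter are hypothetical, and the Poisson sampler is Knuth's simple algorithm, which is only suitable for modest means.

```python
import math
import random


def poisson(mean, rng):
    """Sample a Poisson variate with Knuth's multiplication algorithm."""
    if mean < 0 or mean > 700:
        raise ValueError("mean outside the range this simple sampler handles")
    limit = math.exp(-mean)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product <= limit:
            return count
        count += 1


def particles_to_mix(n_protons, mean_per_proton, rng):
    """Number of input particles to mix into one background frame.

    n_protons: proton count for this microbunch (the intensity fluctuation).
    mean_per_proton: hypothetical mean number of stored particles per proton.
    """
    return poisson(n_protons * mean_per_proton, rng)


rng = random.Random(7)
counts = [particles_to_mix(1.58e7, 1.0e-6, rng) for _ in range(5000)]
```

In a full chain, `n_protons` would itself vary from microbunch to microbunch, so the spread of `counts` would be wider than pure Poisson.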

Contacts

- Current generators: Dave Brown, Andy Edmonds

- Mixing: Andrei Gaponenko (MixBackgroundFrames)

- Production: Yuri Oksuzian (general), Sophie Middleton (pions)