ProductionProceduresMC: Difference between revisions

Revision as of 17:14, 1 May 2025

Introduction

This document outlines the procedures for running Monte Carlo (MC) production with POMS. MC jobs fall into two categories:

Simple jobs

- Process a single input file to produce one output using a standard FCL template.

- Examples: digitization, reconstruction, event ntupling.

These jobs are driven by the Production/Scripts/run_RecoEntuple.py script (which needs a name change). Output from particular datasets must be saved to the persistent area to avoid writing small files to tape. At a later stage, the small files will have to be concatenated and saved to tape.

An example of a POMS campaign that digitizes all the primaries: https://pomsgpvm02.fnal.gov/poms/campaign_stage_info/mu2e/production?campaign_stage_id=24194

The stage parameters are defined as follows:

Param_Overrides = [
  ['-Oglobal.dataset=', '%(dataset)s'],
  ['--stage=', 'digireco_digi_list'],
  ['-Oglobal.release_v_o=', 'au'],
  ['-Oglobal.dbversion=', 'v1_3'],
  ['-Oglobal.fcl=', 'Production/JobConfig/digitize/OnSpill.fcl'],
  ['-Oglobal.nevent=', '-1'],
]

- %(dataset)s – Internal POMS dataset placeholder. For each submission, POMS generates slices named like

dts.sophie.ensembleMDS2a.MDC2020at.art_slice_72935_stage_5 and substitutes the slice name for %(dataset)s.

- digireco_digi_list – The stage definition loaded from

/exp/mu2e/app/users/mu2epro/production_manager/poms_includes/mdc2020ar.cfg

- All other parameters are passed as arguments to the `run_RecoEntuple.py` script within this stage.
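The `%(dataset)s` placeholder has the same form as Python's mapping-style string formatting. The sketch below shows how such a substitution could turn the overrides into command-line arguments. The helper name `expand_overrides` and the way each key is joined to its value are assumptions made for illustration; this is not the actual POMS implementation.

```python
# Illustrative only: expand_overrides is a hypothetical helper, not part of POMS.
PARAM_OVERRIDES = [
    ["-Oglobal.dataset=", "%(dataset)s"],
    ["--stage=", "digireco_digi_list"],
    ["-Oglobal.release_v_o=", "au"],
    ["-Oglobal.dbversion=", "v1_3"],
    ["-Oglobal.fcl=", "Production/JobConfig/digitize/OnSpill.fcl"],
    ["-Oglobal.nevent=", "-1"],
]


def expand_overrides(overrides, dataset):
    """Fill the %(dataset)s placeholder and join each key with its value."""
    values = {"dataset": dataset}
    # Strings without a placeholder pass through the % operator unchanged.
    return [key + (value % values) for key, value in overrides]


# Slice name taken from the example in the text above.
args = expand_overrides(
    PARAM_OVERRIDES,
    "dts.sophie.ensembleMDS2a.MDC2020at.art_slice_72935_stage_5",
)
for arg in args:
    print(arg)
```

Only the first entry changes between submissions; the remaining entries stay fixed for the stage.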

The split type that we use is:

Split Type: drainingn(500)

It is described in the `Edit Campaign Stage` dialog and in the POMS docs:

This type, when filled out as drainingn(n) for some integer n, will pull at most n files at a time from the dataset and deliver them on each iteration, keeping track of the delivered files with a snapshot.
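The behaviour described in that quote can be modelled in a few lines. This is a toy sketch only: the class `DrainingN`, the in-memory set that stands in for the snapshot, and the file names are all hypothetical, and none of this is the POMS source code.

```python
# Illustrative model of the described split behaviour; not the POMS source code.
class DrainingN:
    def __init__(self, dataset_files, n):
        self.dataset_files = list(dataset_files)
        self.n = n
        self.delivered = set()  # stands in for the snapshot of delivered files

    def next_slice(self):
        """Return at most n files that have not been delivered yet."""
        pending = [f for f in self.dataset_files if f not in self.delivered]
        batch = pending[: self.n]
        self.delivered.update(batch)
        return batch


files = [f"file_{i:04d}.art" for i in range(1200)]  # hypothetical file names
splitter = DrainingN(files, 500)

sizes = []
while True:
    batch = splitter.next_slice()
    if not batch:
        break
    sizes.append(len(batch))

print(sizes)  # [500, 500, 200]
```

With 1200 files and n = 500, the dataset drains in three iterations, and no file is delivered twice.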

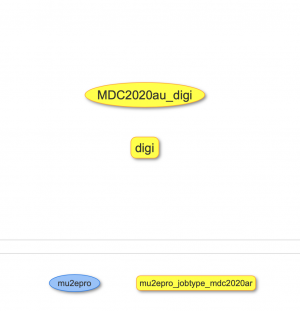

To modify a campaign, the preferred option is to use the GUI editor on the main page, which brings up the view below:

Then double-click the digi cell to modify the campaign parameters.

Complex jobs

- Require unique, job-specific parameters and configurations.

- Examples: stage-1 processing, stage-2 resampling, mixing.

Primaries

We resample primaries from particle stops, using gen_Resampler.sh to produce a parameter file.

Merging

Example:

gen_Merge.sh --json Production/data/merge_filter.json --json_index 4

The entry at index 4 of the JSON file looks like:

{

"desc": "ensembleMDS1eOnSpillTriggered-noMC",

"dsconf": "MDC2020au_best_v1_3",

"append": ["physics.trigger_paths: []", "outputs.strip.fileName: \"dig.owner.dsdesc.dsconf.seq.art\""],

"extra_opts": "--override-output-description",

"fcl": "Production/JobConfig/digitize/StripMC.fcl",

"dataset": "dig.mu2e.ensembleMDS1eOnSpillTriggered.MDC2020aq_best_v1_3.art",

"merge-factor": 1,

"simjob_setup": "/cvmfs/mu2e.opensciencegrid.org/Musings/SimJob/MDC2020au/setup.sh"

}

This entry sets the parameters for gen_Merge.sh. Running the command produces:

cnf.mu2e.ensembleMDS1eOnSpillTriggered-noMC.MDC2020au_best_v1_3.0.tar
cnf.mu2e.ensembleMDS1eOnSpillTriggered-noMC.MDC2020au_best_v1_3.fcl
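The produced file names appear to be built from the `desc` and `dsconf` fields of the JSON entry. The sketch below reproduces the two names under that assumption. The function `config_file_names`, the `owner="mu2e"` default, and the fixed `.0` sequence suffix are inferred from this single example; they are not taken from gen_Merge.sh itself.

```python
import json

# Fields copied from the JSON entry in the text (trimmed to those used here).
ENTRY_JSON = """
{
  "desc": "ensembleMDS1eOnSpillTriggered-noMC",
  "dsconf": "MDC2020au_best_v1_3"
}
"""


def config_file_names(entry, owner="mu2e"):
    """Guess the cnf file names from desc and dsconf (the pattern is an assumption)."""
    stem = f"cnf.{owner}.{entry['desc']}.{entry['dsconf']}"
    return f"{stem}.0.tar", f"{stem}.fcl"


entry = json.loads(ENTRY_JSON)
tar_name, fcl_name = config_file_names(entry)
print(tar_name)
print(fcl_name)
```

A check like this can be handy before using `--pushout`, to confirm that the entry's description and configuration give the intended dataset name.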

The fcl file can be used for testing. If you are happy with the result, upload the par file to disk:

gen_Merge.sh --json Production/data/merge_filter.json --json_index 4 --pushout

Current datasets

You can check recent datasets using listNewDatasets.sh. The current datasets are also listed at: https://mu2ewiki.fnal.gov/wiki/MDC2020#Current_Datasets

These web pages are generated by nightly cron jobs in: /exp/mu2e/app/home/mu2epro/cron/datasetMon/