DQM

Data Quality Monitoring

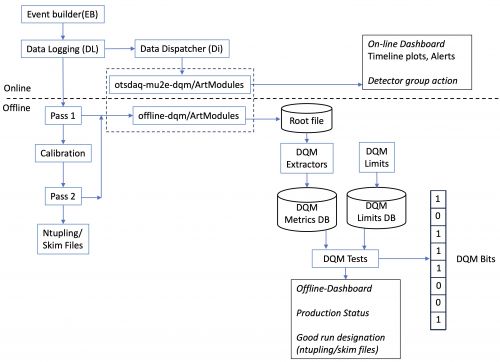

Data Quality Monitoring (DQM) is the process of running checks on new data as it arrives and is processed. These checks happen automatically during data acquisition (DAQ) and during automated processing. In parallel with the automated checks, detector experts also perform data quality checks using custom software. The scope of the checks and the response to the DQM results depend on the situation, as summarized in the table below. DQM is a critical part of the Mu2e computing infrastructure, as described in the Mu2e Offline Computing Model (Mu2e-doc-48677).

| Operation | Scope | Level |
|---|---|---|
| DAQ | Do I need to stop the DAQ? | Acute Failure |
| Pass1 | Do I need to stop the DAQ? | Chronic Failure |
| Pass2 | Do I need to update calibrations? | Slow Drift |

During DAQ, the main goal of DQM is to make sure that the detector hardware and data acquisition system are functioning as expected. DQM needs to assess component health in real time and alert personnel to acute hardware failures that would require stopping data taking to diagnose and fix the problem. The details of online DQM can be found here.

During the offline pass1 and pass2 reconstruction jobs, DQM histograms for each detector system will be created and saved. We expect two general approaches to evaluating data quality. The first is to extract a set of simple numbers, known as metrics (such as the mean number of hits on a track), that are sensitive to overall detector performance and data quality. These quantities will be saved in a database and plotted as a function of time or run number. The second is to compare the histograms to those from previous runs, possibly including a quantitative comparison such as a χ² test. Generally, the Offline operations and online shift crews will be responsible for reviewing the DQM dashboards to spot unexpected changes and drive fixes. The limits for the offline metrics will be stored in a database and used to create automatic tests of the metric values that can raise alarms and warnings. Finally, the DQM content will be used to identify good runs through the creation of DQM bits and the good-run list tools.
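As a rough illustration of the two approaches above, the sketch below computes a histogram-mean metric, a χ²-per-degree-of-freedom comparison against a reference histogram, and a warning/alarm check against stored limits. It is a minimal Python sketch, not the Mu2e implementation: histograms are assumed to be plain lists of bin counts with identical binning, and the function names, the `MetricLimits` record, and the two-tier warning/alarm scheme are all hypothetical.

```python
from dataclasses import dataclass


def histogram_mean(bin_centers, counts):
    """Mean of a binned distribution, e.g. the number of hits on a track."""
    total = sum(counts)
    if total == 0:
        return float("nan")
    return sum(x * n for x, n in zip(bin_centers, counts)) / total


def chi2_per_ndf(counts, ref_counts):
    """Chi-square per degree of freedom between a histogram and a reference
    with identical binning. The reference is scaled to the same number of
    entries; Poisson variances are used for both, and bins that are empty
    in both histograms are skipped."""
    if len(counts) != len(ref_counts):
        raise ValueError("histograms must have identical binning")
    ref_total = sum(ref_counts)
    if ref_total == 0:
        raise ValueError("reference histogram is empty")
    scale = sum(counts) / ref_total
    chi2 = 0.0
    nbins = 0
    for n, r in zip(counts, ref_counts):
        variance = n + scale * scale * r
        if variance == 0:
            continue
        chi2 += (n - scale * r) ** 2 / variance
        nbins += 1
    ndf = nbins - 1  # one degree of freedom is used by the normalization
    return chi2 / ndf if ndf > 0 else float("nan")


@dataclass
class MetricLimits:
    """Hypothetical limits record, as it might be read from the database."""
    alarm_low: float
    warn_low: float
    warn_high: float
    alarm_high: float


def check_metric(value, limits):
    """Classify a metric value; NaN fails every comparison and gives 'alarm'."""
    if not (limits.alarm_low <= value <= limits.alarm_high):
        return "alarm"
    if not (limits.warn_low <= value <= limits.warn_high):
        return "warning"
    return "ok"


if __name__ == "__main__":
    centers = [1.0, 2.0, 3.0, 4.0]
    run_counts = [0, 10, 20, 10]
    ref_counts = [0, 20, 40, 20]  # same shape, twice the statistics
    mean = histogram_mean(centers, run_counts)
    limits = MetricLimits(alarm_low=2.0, warn_low=2.5, warn_high=3.5, alarm_high=4.0)
    print(mean, check_metric(mean, limits), chi2_per_ndf(run_counts, ref_counts))
```

Running the demo prints `3.0 ok 0.0`: the metric sits inside the warning band, and the run histogram has exactly the reference shape. If the DQM histograms are ROOT histograms, ROOT's `TH1::Chi2Test` offers a more complete version of the comparison shown here.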

In a little more detail, we expect the data to be moved offline from the DAQ system as soon as possible. The data will come in several datasets, such as electron triggers or off-spill cosmics, and the offline system will run pass1 reconstruction on each of these streams. Pass1 will provide an initial look at the data and prepare histograms and ntuples for the calibration process.

After a pass1 reco file is produced, a DQM executable will be run on it. The DQM executable will produce a DQM histogram file, which will primarily contain histograms of fundamental quantities. These DQM histogram files will be saved and written to tape. When the DQM files are available, a few simple executables, called extractors, will run on each histogram file and write out text files containing DQM metrics, such as the average cluster energy, the average number of hits on a track, or the number of defective channels detected. Another executable (dqmTool) will insert these metrics into the permanent database.

When possible, an aggregator will add together all the histogram files related to one run, producing a DQM histogram file for the run. This file will also be saved, the extractors will be run on it, and its metrics saved. The same sort of aggregation might be done for a weekly report or other purposes. The DQM histograms and the extracted metrics, presented as timelines, can be monitored by operations and shift crews to detect problems in the detector, data format, conditions, or code, and to drive quick corrections. The same procedure will be applied to pass2 reconstruction, and possibly to other procedures such as nightly code validation.
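The aggregation and extraction steps described above can be sketched as follows. This is a hypothetical Python illustration, not the actual Mu2e aggregator, extractors, or dqmTool: each "file" is assumed to be an in-memory dict mapping a histogram name to its bin counts, the histogram name `trkNHits` is invented, and the `name value` text format is an assumption rather than the real extractor output format.

```python
def aggregate(histogram_files):
    """Add per-file histograms bin by bin into one per-run set.

    Each element of histogram_files stands in for one DQM histogram file
    and maps a histogram name to its list of bin counts."""
    total = {}
    for hists in histogram_files:
        for name, counts in hists.items():
            if name not in total:
                total[name] = list(counts)  # copy so inputs are not modified
            elif len(total[name]) != len(counts):
                raise ValueError(f"binning mismatch for histogram {name!r}")
            else:
                total[name] = [a + b for a, b in zip(total[name], counts)]
    return total


def extract_metrics(hists, bin_centers):
    """Hypothetical extractor: entry count for every histogram, plus the
    mean for histograms whose bin centers are supplied."""
    metrics = {}
    for name, counts in hists.items():
        entries = sum(counts)
        metrics[f"{name}.entries"] = entries
        if name in bin_centers and entries > 0:
            weighted = sum(x * n for x, n in zip(bin_centers[name], counts))
            metrics[f"{name}.mean"] = weighted / entries
    return metrics


def format_metrics(metrics):
    """Render metrics as one 'name value' line each (assumed format)."""
    return "".join(f"{name} {metrics[name]:.6g}\n" for name in sorted(metrics))


def write_metrics(metrics, path):
    """Write the metrics text file; the format is assumed, not dqmTool's."""
    with open(path, "w") as f:
        f.write(format_metrics(metrics))


if __name__ == "__main__":
    file_a = {"trkNHits": [0, 4, 6]}
    file_b = {"trkNHits": [2, 6, 2]}
    run = aggregate([file_a, file_b])  # per-run histogram: [2, 10, 8]
    metrics = extract_metrics(run, {"trkNHits": [10.0, 20.0, 30.0]})
    print(format_metrics(metrics), end="")
```

The demo prints `trkNHits.entries 20` and `trkNHits.mean 23`. Keeping aggregation separate from extraction means the same extractor can run unchanged on a single file, a whole run, or a weekly sum, which matches the reuse described above.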

File names

DQM file names will have a specific pattern: