DQM

Data Quality Monitoring

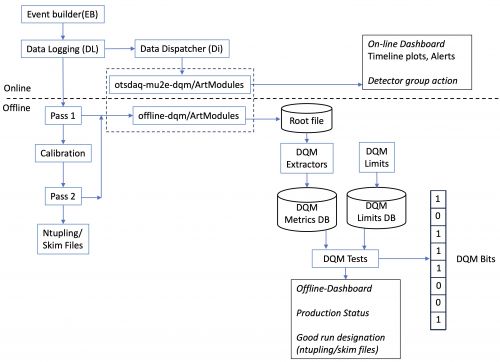

Data Quality Monitoring (DQM) is the process of running checks on new data as it comes in and is processed. These checks happen automatically during data acquisition (online-DQM) and during automated data processing (offline-DQM). In parallel with the automated checks, detector experts perform their own data quality checks using custom software. The scope of the checks and the response to the DQM results depend on the situation, as summarized in the table below.

During data acquisition (DAQ), the main goal of DQM is to make sure that the detector hardware and data acquisition system are functioning as expected. DQM processes need to assess component health in real time to alert personnel to acute hardware failures that would require stopping data taking to assess and fix a problem. During offline processing (pass1), the goal of DQM is to look for "slow" failures using timelines of detector metrics that monitor stability of operation. Pass1 DQM also provides a redundant check of critical online quantities. Pass2 DQM is used for validation of detector calibrations and final processing checks. DQM is a critical part of the Mu2e computing infrastructure as described in the Mu2e Offline Computing Model (Mu2e-doc-48677).

| Operation | Scope | Level |
|---|---|---|
| Online - DAQ | Do I need to stop the DAQ? | Acute Failure |
| Offline - Pass1 | Do I need to stop the DAQ? | Chronic Failure |
| Offline - Pass2 | Do I need to update calibrations? | Slow Drift |

Offline DQM

To validate the data processing, and to provide redundancy for critical online quantities, DQM histograms for each detector system will be created and saved during the offline pass1 and pass2 reconstruction jobs. To evaluate the status of the data quality, we expect two general approaches: timelines and histogram comparisons. For timelines, a set of numbers known as metrics (such as the mean number of hits on a track), chosen to be sensitive to overall detector performance and data quality, is extracted. These quantities are saved in a database and plotted as a function of time or run number. The second general approach is to compare the histograms to those from previous runs, possibly including a quantitative comparison such as a χ². Generally, the offline operations and online shift crews would be responsible for reviewing the DQM dashboards to spot unexpected changes and drive fixes. The limits for the offline metrics will be stored in a database and used to create automatic tests of metric values that can send alarms and warnings. Finally, the DQM content will be used to determine good runs through the creation of DQM bits and the good-run list tools.
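One possible form of the quantitative histogram comparison is sketched below: a χ² statistic for two unweighted histograms with identical binning but different total counts. The function name and the choice of this particular statistic are assumptions made for illustration; the comparison used by the DQM tools may differ.

```python
def chi2_compare(current, reference):
    """Chi-square comparison of two unweighted histograms.

    Both arguments are lists of raw bin counts with identical binning.
    Returns (chi2, ndf). Bins that are empty in both histograms are skipped.
    Hypothetical helper, not part of the DQM package.
    """
    if len(current) != len(reference):
        raise ValueError("histograms must have the same number of bins")
    n_tot = sum(current)
    m_tot = sum(reference)
    if n_tot == 0 or m_tot == 0:
        raise ValueError("both histograms must contain entries")

    chi2 = 0.0
    used_bins = 0
    for n, m in zip(current, reference):
        if n + m == 0:
            continue  # no information in this bin
        # The cross-scaling by the totals removes the normalization difference.
        chi2 += (m_tot * n - n_tot * m) ** 2 / (n + m)
        used_bins += 1
    chi2 /= n_tot * m_tot
    return chi2, used_bins - 1


if __name__ == "__main__":
    chi2, ndf = chi2_compare([10, 20, 30], [30, 20, 10])
    print(f"chi2 = {chi2:.1f} for {ndf} degrees of freedom")
```

A reference histogram with the same shape but more entries gives χ² = 0, so the statistic responds to shape changes rather than to run length.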


In a little more detail, we expect the data to be moved from the DAQ system to offline storage as soon as possible. The data will come in several datasets, such as electron triggers or off-spill cosmics. The offline system will run pass1 reconstruction on these streams of data. Pass1 provides an initial look at the data and prepares histograms and ntuples for the calibration process.

After the pass1 reco file is produced, a DQM executable will be run on it. The DQM executable will produce a DQM histogram file, which will primarily contain histograms of fundamental quantities. These DQM histogram files will be saved and written to tape. When the DQM files are available, a few simple executables, called extractors, will run on the histogram file and write out text files containing DQM metrics, such as the average cluster energy, the average number of hits on a track, or the number of defective channels detected. Another executable (dqmTool) will insert these metrics into the permanent database.

When possible, an aggregator will add together all the histogram files related to one run, producing a DQM histogram file for the run. This file will also be saved, the extractors will be run on it, and the metrics saved. The same sort of aggregation might be done for a weekly report or for other purposes. The DQM histograms and the extracted metrics, presented as timelines, can be monitored by operations and shift crews to detect problems in the detector, data format, conditions, or code, and lead to quick corrections. The same procedure will occur for pass2 reconstruction, and possibly for other procedures, such as nightly code validation.
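The aggregation and extraction steps can be illustrated with a small sketch. Histograms are represented here as plain lists of bin counts, and the function names are hypothetical; the real aggregator and extractors operate on ROOT histogram files, and only the six-field text format of the output line is taken from this page.

```python
import math


def aggregate(histograms):
    """Bin-wise sum of histograms (lists of counts) with identical binning."""
    n_bins = len(histograms[0])
    if any(len(h) != n_bins for h in histograms):
        raise ValueError("all histograms must have the same binning")
    return [sum(column) for column in zip(*histograms)]


def extract_mean(counts, low, high):
    """Return (mean, uncertainty on the mean) computed from bin centers."""
    width = (high - low) / len(counts)
    centers = [low + (i + 0.5) * width for i in range(len(counts))]
    total = sum(counts)
    if total == 0:
        raise ValueError("histogram is empty")
    mean = sum(c * x for c, x in zip(counts, centers)) / total
    variance = sum(c * (x - mean) ** 2 for c, x in zip(counts, centers)) / total
    return mean, math.sqrt(variance / total)


def metric_line(group, subgroup, name, value, error, code=0):
    """Format one metric as 'group,subgroup,name,value,uncertainty,code'."""
    return f"{group},{subgroup},{name},{value:.4g},{error:.4g},{code}"


if __name__ == "__main__":
    # Two per-file histograms of cluster energy, 3 bins covering 0 to 30 MeV.
    run_hist = aggregate([[1, 2, 1], [1, 2, 1]])
    mean, err = extract_mean(run_hist, 0.0, 30.0)
    print(metric_line("cal", "cluster", "meanE", mean, err))
```

Keeping aggregation separate from extraction mirrors the workflow above: the same extractor can run unchanged on a per-file histogram or on a per-run sum.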

Monitors

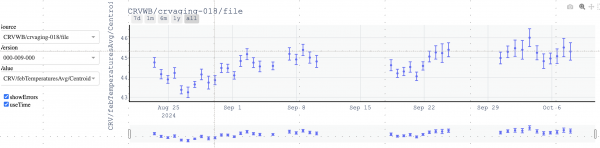

The DQM monitoring software is part of the Pass1 musing. The timelines can be viewed on-site or off-site through a web browser by using the timeline command. If you are off-site, you must first establish a tunnel to a Fermilab-based computer using the following steps:

    ssh -AKX -L 8050:127.0.0.1:8050 -l <username> mu2egpvm0<n>.fnal.gov
    mu2einit
    muse setup Pass1
    source /cvmfs/mu2e.opensciencegrid.org/env/ana/current/bin/activate
    export SSL_CERT_FILE=/etc/pki/tls/cert.pem
    timeline

Once the timeline app is running and you get the message "Dash is running on http://127.0.0.1:8050/", open your local browser to http://127.0.0.1:8050/ to view the timelines. Use the dropdown menus on the left to select the source, version, and metric you want to see.


File names

DQM histogram files, created by the art processes during pass1 and pass2, will have a specific pattern:

data_tier.owner.DESC-DQM.CAMPAIGN-VSTRING-AGGREGATION.seq.root

- the names, such as the data_tier, owner, sequencer, and file format should follow the standard file name pattern

- DESC typically represents a logical stream of files written by the DAQ, expected to be named as a dataset, and fed into the offline processing. This field is expected to be something like "ele" or "cosmic". Note that the string DQM is always in the description.

- CAMPAIGN is the descriptor for the DAQ setup which produced these DQM plots, for example, CRVWB for the CRV wideband setup, and CRVWBA for the CRV-VST setup.

- VSTRING currently has three components - pversion-cversion-iversion

- AGGREGATION allows for the fact that smaller DQM files are likely to be added together, so the, say, 20 files that go into a stream during a run can be added together and a single DQM result can be recorded for the whole run. Some aggregation key words might be "file" for a single file, or "run" or "week".

For example, the offline DQM file created for the initial CRV-wideband test has the format

ntd.mu2e.CRV_wideband_cosmics-DQM.CRVWB-000-009-000-file.002098_001.root
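As an illustration of how this pattern can be taken apart, the sketch below splits a DQM file name into its fields. The class and function names are hypothetical, and the sketch assumes that neither CAMPAIGN nor AGGREGATION contains a hyphen and that VSTRING always has exactly three components.

```python
from typing import NamedTuple


class DqmFileName(NamedTuple):
    data_tier: str
    owner: str
    desc: str
    campaign: str
    vstring: str
    aggregation: str
    sequencer: str
    file_format: str


def parse_dqm_file_name(name):
    """Split data_tier.owner.DESC-DQM.CAMPAIGN-VSTRING-AGGREGATION.seq.root."""
    fields = name.split(".")
    if len(fields) != 6:
        raise ValueError(f"expected 6 dot-separated fields, got {len(fields)}")
    data_tier, owner, description, configuration, sequencer, file_format = fields

    suffix = "-DQM"
    if not description.endswith(suffix):
        raise ValueError("description field must end with -DQM")
    desc = description[: -len(suffix)]

    # Assumption: CAMPAIGN, three version numbers, AGGREGATION, hyphen-separated.
    parts = configuration.split("-")
    if len(parts) != 5:
        raise ValueError("expected CAMPAIGN-p-c-i-AGGREGATION")
    campaign, pversion, cversion, iversion, aggregation = parts

    return DqmFileName(data_tier, owner, desc, campaign,
                       "-".join((pversion, cversion, iversion)),
                       aggregation, sequencer, file_format)


if __name__ == "__main__":
    example = "ntd.mu2e.CRV_wideband_cosmics-DQM.CRVWB-000-009-000-file.002098_001.root"
    print(parse_dqm_file_name(example))
```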

Metrics Database

The database is used to record numerical metrics derived by the extractors from a DQM histogram file. These metrics can then be plotted in timelines. Each entry needs to know

- the source of the metrics. Logically this is the combination of "process, stream, aggregation, version".

- the relevant run period and/or time period for the metrics.

- the values of the metrics

The source values can be derived directly from the standard DQM file name, so it will be very useful to maintain this pattern. If the source is not a file with a standard name, it can be represented by the equivalent four words. The more these words are standardized, the more straightforward it will be to organize and search for the metrics. They should be treated as case-sensitive, and all should be lower-case.

We expect that timelines can usually be adequately plotted using only the run or time of the start of the period when the metric is relevant. For example, a DAQ file might contain run 100000, subrun 0 to subrun 100. The following file might contain subruns 101 to 150. The metrics extracted from these files could be plotted at the points 100000:0 and 100000:101. However, the database also allows the start and stop of the relevant period to be recorded. The relevant period of the first file can be represented by the run range 100000:0-100000:100, using the standard run range format. We can also enter the start and stop times of the relevant period. The entry requires at least a start to the run range OR a start to the time period. The end of the run range and the end time are optional. Both run range and time period may be present.
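To make the run range notation concrete, here is a minimal parser. It is a sketch that handles only the two forms that appear on this page, `run:subrun` and `run:subrun-run:subrun`; the standard run range format may accept other forms, and the function name is hypothetical.

```python
def parse_run_range(text):
    """Parse 'run:subrun' or 'run:subrun-run:subrun'.

    Returns (start, end), where each is a (run, subrun) tuple and end is
    None when only a start point is given.
    """
    def parse_point(point):
        run, sep, subrun = point.partition(":")
        if not sep:
            raise ValueError(f"expected run:subrun, got {point!r}")
        return int(run), int(subrun)

    start_text, sep, end_text = text.partition("-")
    start = parse_point(start_text)
    end = parse_point(end_text) if sep else None
    if end is not None and end < start:
        raise ValueError("run range ends before it starts")
    return start, end


if __name__ == "__main__":
    print(parse_run_range("100000:0-100000:100"))
    print(parse_run_range("100000:101"))
```

The start tuple alone is enough to place a point on a timeline, which matches the requirement that only the start of the range is mandatory.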

The numerical metric is labeled by three fields: "group, subgroup, name". For example, for the metrics derived from histograms made in pass1, the groups might be "cal", "trk", or "crv". The subgroups might be "digi", "track", or "cluster". The "name" might be "meanEnergy", "rmsEnergy", or "zeroERate". While it is possible to write a metric name like "Average momentum (MeV) for tracks with p>10 and MeV 20 hits and cal cluster E>10.0 MeV", ultimately this will be more annoying than helpful. If short names need to be documented, it is probably best to do that in a parallel system, not in the database name. No commas are allowed (csv format is used internally), and some other special characters might also fail.

The numerical metric is represented by a float for the value, a float for its uncertainty (0.0 if N/A), and an integer status code. The values of the integer are determined by an enum in DQM/inc/DqmValue.hh. Zero means normal, or success.
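A metric in its text form is a single comma-separated line with six fields: group, subgroup, name, value, uncertainty, code. The following sketch checks such a line before it is committed. It is a hypothetical helper, not part of dqmTool, and it only checks the field count and types, not the meaning of the status code.

```python
def parse_metric_line(line):
    """Parse 'group,subgroup,name,value,uncertainty,code' into a tuple.

    Raises ValueError if the field count is wrong (for example because a
    name contains a comma) or if a numeric field does not parse.
    """
    fields = [field.strip() for field in line.split(",")]
    if len(fields) != 6:
        raise ValueError(f"expected 6 fields, got {len(fields)}: {line!r}")
    group, subgroup, name = fields[:3]
    if not all((group, subgroup, name)):
        raise ValueError("group, subgroup and name must be non-empty")
    value = float(fields[3])
    uncertainty = float(fields[4])
    code = int(fields[5])
    return group, subgroup, name, value, uncertainty, code


if __name__ == "__main__":
    print(parse_metric_line("cal,digi,meanE,0.125,0.001,0"))
```

A metric name containing a comma produces extra fields and is rejected, which is the reason commas are not allowed in names.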

If a source and interval apply to many metrics being entered, the metrics can be listed, one per line, in a text file and committed in one command.

In this example, an extractor has produced a set of metrics in a text file. The extractor was run on a properly named histogram file. The run and subrun of the start of the period are taken from the file name. The time range will be null.

    cat myvalues.txt
    cal,digi,meanE,0.125,0.001,0
    cal,digi,fracZeroE,0.001,0.0,0
    cal,cluster,meanE,25.2,0.12,0
    cal,cluster,rmsE,2.2,0.25,0

    dqmTool commit-value \
        --source ntd.mu2e.DQM_ele.pass1_file_0.100000_00000100.root \
        --value myvalues.txt

A single value can be committed with text:

    dqmTool commit-value \
        --source ntd.mu2e.DQM_ele.pass1_file_0.100000_00000100.root \
        --value "cal,digi,meanE,0.125,0.001,0"

and the source can be explicit if it is not a file.

    dqmTool commit-value \
        --source "valNightly,reco,day,0" \
        --value myvalues.txt

The commit can contain full period information. Times are in ISO 8601 format. If time zone is missing, the current time zone will be taken from the computer running the exe, and will be saved in the database in UTC.

    dqmTool commit-value \
        --source ntd.mu2e.DQM_ele.pass1_file_0.100000_00000100.root \
        --runs "100000:100-100000:999999" \
        --start "2022-01-01T16:04:10.255-6:00" \
        --end "2022-01-01T22:32:44.908-6:00" \
        --value myvalues.txt
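The time zone handling described above (an explicit offset is honored, a missing offset falls back to the local time zone, and the stored value is UTC) can be reproduced with the Python standard library. This is a sketch of the conversion only, not of what dqmTool does internally. The example writes the offset as -06:00, the form Python's `datetime.fromisoformat` accepts.

```python
from datetime import datetime, timezone


def to_utc(text):
    """Convert an ISO 8601 timestamp to a timezone-aware UTC datetime.

    If the string carries no UTC offset, astimezone() assumes the local
    time zone of the machine running this code.
    """
    stamp = datetime.fromisoformat(text)
    return stamp.astimezone(timezone.utc)


if __name__ == "__main__":
    print(to_utc("2022-01-01T16:04:10.255-06:00").isoformat())
    # No offset given: the result depends on the local time zone.
    print(to_utc("2022-01-01T16:04:10.255").isoformat())
```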

To read the database, list the known sources of metrics

    > dqmTool print-sources
    ...
    5,valNightly,reco,day,0
    ...

The initial integer is the database lookup index, or "SID" of this source. The other fields are "process, stream, aggregation, version".

List the known value (metric) names

    > dqmTool print-values
    ...
    6,ops,stats,CPU

The first integer is the database lookup index, or "VID", of the value name, and the rest are the value's "group, subgroup, name".

Now list all the known intervals (not too useful)

    > dqmTool print-intervals | head
    45,4,0,0,0,0,2022-01-23 00:01:00-06:00,2022-01-23 00:01:00-06:00
    ...

The first integer is the database lookup index, or "IID", of each interval, and the second is the SID of the source for the interval. The next four integers are the run and subrun range, if applicable, and the last two fields are the start and stop times, if applicable.
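For scripts that post-process this output, an interval line can be split into named fields as sketched below. The order of the four run and subrun integers (start run, start subrun, end run, end subrun) and the treatment of an empty time field as "not applicable" are assumptions; the function and key names are hypothetical.

```python
from datetime import datetime


def parse_interval_line(line):
    """Split 'IID,SID,run,subrun,run,subrun,start,end' into a dict."""
    fields = line.strip().split(",")
    if len(fields) != 8:
        raise ValueError(f"expected 8 fields, got {len(fields)}")
    iid, sid, start_run, start_subrun, end_run, end_subrun = map(int, fields[:6])

    def parse_time(text):
        # Assumption: an empty field means the time is not applicable.
        return datetime.fromisoformat(text) if text else None

    return {
        "iid": iid,
        "sid": sid,
        "start": (start_run, start_subrun),
        "end": (end_run, end_subrun),
        "start_time": parse_time(fields[6]),
        "end_time": parse_time(fields[7]),
    }


if __name__ == "__main__":
    line = "45,4,0,0,0,0,2022-01-23 00:01:00-06:00,2022-01-23 00:01:00-06:00"
    print(parse_interval_line(line))
```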

List all the numerical metrics for source SID 5 and value VID 6

    dqmTool print-numbers --source 5 --value 6 --heading

The last three numbers are the number, its uncertainty, and the status code.

Or, in more readable format

    > dqmTool print-numbers --source 5 --value 6 --heading --expand | tail
    45214,1095,150.0,0
    5,valNightly,reco,day,0
    6,ops,stats,CPU
    7549,5,0,0,0,0,2024-07-24 00:01:00-05:00,2024-07-24 00:01:00-05:00

The metric here is 1095 ± 150, with status code 0.
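The same reading of a number line can be done in a script. The sketch below takes the last three fields as value, uncertainty, and status code, as stated above; treating the first field as a database index for the number is an assumption, and the function name is hypothetical.

```python
def format_number_line(line):
    """Render 'index,value,uncertainty,code' as 'value ± uncertainty, code N'."""
    fields = line.strip().split(",")
    if len(fields) != 4:
        raise ValueError(f"expected 4 fields, got {len(fields)}")
    # fields[0] is assumed to be the database index of this number.
    value = float(fields[1])
    uncertainty = float(fields[2])
    code = int(fields[3])
    return f"{value:g} ± {uncertainty:g}, code {code}"


if __name__ == "__main__":
    print(format_number_line("45214,1095,150.0,0"))
```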