ProductionProceduresMC

POMS MC Production Guide

Introduction

This guide describes how to run Monte Carlo (MC) production using POMS. Jobs fall into two categories:

Base-template jobs

- Definition: Process a single input file to produce one output via a standard FCL template.

- Driver: Production/Scripts/run_RecoEntuple.py (consider renaming to run_DigiReco.py).

- Output storage: Write results to persistent storage to avoid many small tape files; later concatenate before archiving.

- Examples: digitization, reconstruction, event-ntupling.

- Example campaign: POMS Campaign 24194

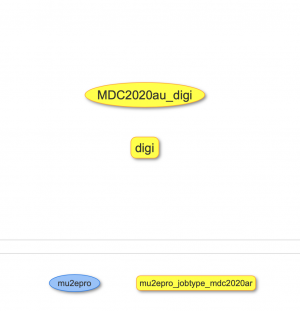

Stage Parameter Overrides

Param_Overrides = [
  ['-Oglobal.dataset=', '%(dataset)s'],
  ['--stage=', 'digireco_digi_list'],
  ['-Oglobal.release_v_o=', 'au'],
  ['-Oglobal.dbversion=', 'v1_3'],
  ['-Oglobal.fcl=', 'Production/JobConfig/digitize/OnSpill.fcl'],
  ['-Oglobal.nevent=', '-1'],
]

- %(dataset)s: placeholder for POMS slice names (e.g. dts.sophie.ensembleMDS2a.MDC2020at.art_slice_72935_stage_5)

- digireco_digi_list: stage definition from …/poms_includes/mdc2020ar.cfg

- Remaining overrides feed into run_RecoEntuple.py
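The placeholder expansion can be illustrated with a short Python sketch. It assumes, for illustration only, that each [flag, value] pair is concatenated into one command-line token and that %(dataset)s is filled with Python %-style formatting. The helper name expand_overrides is hypothetical and is not part of POMS.

```python
# Hypothetical illustration of how Param_Overrides pairs could expand;
# this is not the actual POMS implementation.
param_overrides = [
    ["-Oglobal.dataset=", "%(dataset)s"],
    ["--stage=", "digireco_digi_list"],
    ["-Oglobal.release_v_o=", "au"],
    ["-Oglobal.dbversion=", "v1_3"],
    ["-Oglobal.fcl=", "Production/JobConfig/digitize/OnSpill.fcl"],
    ["-Oglobal.nevent=", "-1"],
]


def expand_overrides(overrides, dataset):
    """Join each [flag, value] pair and fill the %(dataset)s placeholder."""
    values = {"dataset": dataset}
    # Values without a placeholder pass through %-formatting unchanged.
    return [flag + (value % values) for flag, value in overrides]


args = expand_overrides(
    param_overrides,
    "dts.sophie.ensembleMDS2a.MDC2020at.art_slice_72935_stage_5",
)
for arg in args:
    print(arg)
```

Only the first pair changes per slice; the other pairs stay fixed for the whole stage.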

Split Types

POMS offers multiple split types, but we have mostly been using drainingn and nfiles.

drainingn(n): pulls at most n files per iteration and tracks delivered files via snapshots.
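The draining behaviour can be pictured with a toy model. This is a sketch of the idea only, not POMS code: a Python set stands in for the snapshot, and the file names are made up.

```python
# Toy model of a drainingn(n)-style split; not POMS code.
def drain(dataset_files, delivered, n):
    """Return up to n files not yet delivered and record them as delivered."""
    batch = [f for f in dataset_files if f not in delivered][:n]
    delivered.update(batch)  # the set plays the role of the snapshot
    return batch


files = [f"file_{i:03d}.art" for i in range(5)]  # hypothetical file names
snapshot = set()

first = drain(files, snapshot, 2)
second = drain(files, snapshot, 2)
third = drain(files, snapshot, 2)
fourth = drain(files, snapshot, 2)  # nothing left to deliver

print(first, second, third, fourth)
```

Each call delivers only files that earlier calls have not seen, so resubmitting an iteration never hands out the same file twice in this model.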

To modify a campaign, the preferred option is to use the GUI editor, reachable from the campaign's main page.

In the editor, double-click the digi cell to modify the campaign parameters.

Extended-template jobs

- Definition: Require unique, job-specific parameters and configurations.

- Examples: stage-1 processing, stage-2 resampling, mixing.

- Example campaign: POMS Campaign 24200

Stage-1

Stage-1 datasets are generated without input datasets. Instead, each job needs an index to produce the output of either POT interactions or cosmics. The index is provided by index datasets (see the Index datasets section below).

Generate the par file (cnf*tar) with json2jobdef.sh.

Example:

mu2einit
source /cvmfs/mu2e-development.opensciencegrid.org/museCIBuild/main/37d0e483_36ce03c5_b63b18df/setup.sh
setup dhtools
setup mu2egrid
json2jobdef.sh --json $MUSE_WORK_DIR/Production/data/stage1.json --desc PiBeam

Sample JSON entry:

{
  "desc": "PiBeam",
  "dsconf": "MDC2020av",
  "fcl": "Production/JobConfig/beam/POT_infinitepion.fcl",
  "fcl_overrides": {
    "services.GeometryService.bFieldFile": "\"Offline/Mu2eG4/geom/bfgeom_no_tsu_ps_v01.txt\""
  },
  "njobs": 10000,
  "events": 100000,
  "run": 1201,
  "simjob_setup": "/cvmfs/mu2e.opensciencegrid.org/Musings/SimJob/MDC2020av/setup.sh"
}

Each entry essentially sets the parameters for json2jobdef.sh.

Produces:

cnf.mu2e.PiBeam.MDC2020av.0.tar
cnf.mu2e.PiBeam.MDC2020av.0.fcl

The fcl file is intended for testing.
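The link between a JSON entry and the produced file names can be sketched in Python. Two things here are assumptions rather than documented behaviour: that the JSON file holds a list of entries from which --desc selects one, and that the names follow the pattern cnf.<owner>.<desc>.<dsconf>.<sequencer>, as inferred from the example output. The helpers select_entry and par_file_names are hypothetical.

```python
import json

# Assumed layout: the JSON file holds a list of entries and --desc picks one.
# The entry is a trimmed copy of the sample; only the fields used here are kept.
STAGE1_JSON = """
[
  {"desc": "PiBeam", "dsconf": "MDC2020av", "njobs": 10000, "run": 1201}
]
"""


def select_entry(entries, desc):
    """Return the single entry whose "desc" field matches."""
    matches = [e for e in entries if e["desc"] == desc]
    if len(matches) != 1:
        raise ValueError(f"expected exactly one entry for {desc!r}, found {len(matches)}")
    return matches[0]


def par_file_names(entry, owner="mu2e", sequencer="0"):
    """Build the cnf.<owner>.<desc>.<dsconf>.<sequencer> tar and fcl names."""
    stem = f"cnf.{owner}.{entry['desc']}.{entry['dsconf']}.{sequencer}"
    return stem + ".tar", stem + ".fcl"


entry = select_entry(json.loads(STAGE1_JSON), "PiBeam")
tar_name, fcl_name = par_file_names(entry)
print(tar_name)
print(fcl_name)
```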

After testing, upload the par file to disk:

json2jobdef.sh --json $MUSE_WORK_DIR/Production/data/stage1.json --desc PiBeam --pushout

The JSON files are located in Production/data and can be inspected there.

Merging

Example:

json2jobdef.sh --json $MUSE_WORK_DIR/Production/data/merge_filter.json --desc ensembleMDS1eOnSpillTriggered-noMC

The JSON entry looks like:

{
  "desc": "ensembleMDS1eOnSpillTriggered-noMC",
  "dsconf": "MDC2020au_best_v1_3",
  "fcl_overrides": {
    "physics.trigger_paths": [],
    "outputs.strip.fileName": "\"dig.owner.dsdesc.dsconf.seq.art\""
  },
  "extra_opts": "--override-output-description",
  "fcl": "Production/JobConfig/digitize/StripMC.fcl",
  "input_data": "dig.mu2e.ensembleMDS1eOnSpillTriggered.MDC2020aq_best_v1_3.art",
  "merge_factor": 1,
  "simjob_setup": "/cvmfs/mu2e.opensciencegrid.org/Musings/SimJob/MDC2020au/setup.sh"
}

Produces:

cnf.mu2e.ensembleMDS1eOnSpillTriggered-noMC.MDC2020au_best_v1_3.0.tar
cnf.mu2e.ensembleMDS1eOnSpillTriggered-noMC.MDC2020au_best_v1_3.0.fcl
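The fcl_overrides values in the merge entry are written so that they could be dropped into FHiCL unchanged: the file name carries its own escaped quotes, and the empty trigger-path list stays a list. A minimal sketch of that idea follows, assuming each override becomes one "key: value" line appended to the job fcl. How json2jobdef.sh actually applies the overrides is not described in this guide, and render_overrides is a hypothetical helper.

```python
import json

# The fcl_overrides block of the merge entry as json.load would return it:
# the file name string keeps its embedded double quotes.
overrides = {
    "physics.trigger_paths": [],
    "outputs.strip.fileName": '"dig.owner.dsdesc.dsconf.seq.art"',
}


def render_overrides(overrides):
    """Turn each override into a 'key: value' line (assumed FHiCL form)."""
    lines = []
    for key, value in overrides.items():
        # Strings already contain any quotes FHiCL needs; other JSON values
        # (lists, numbers) are serialized with json.dumps.
        text = value if isinstance(value, str) else json.dumps(value)
        lines.append(f"{key}: {text}")
    return lines


fcl_lines = render_overrides(overrides)
print("\n".join(fcl_lines))
```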

Primaries

Primaries are resampled from particle stops.

The same script is used. Example:

json2jobdef.sh --json $MUSE_WORK_DIR/Production/data/resampler.json --desc RPCExternal

The JSON entry looks like:

{
  "desc": "RPCExternal",
  "dsconf": "MDC2020as",
  "fcl": "Production/JobConfig/primary/RPCExternal.fcl",
  "fcl_overrides": {
    "services.GeometryService.bFieldFile": "\"Offline/Mu2eG4/geom/bfgeom_no_tsu_ps_v01.txt\""
  },
  "resampler_name": "TargetPiStopResampler",
  "input_data": "sim.mu2e.PiminusStopsFilt.MDC2020ak.art",
  "njobs": 2000,
  "events": 1000000,
  "run": 1202,
  "simjob_setup": "/cvmfs/mu2e.opensciencegrid.org/Musings/SimJob/MDC2020as/setup.sh"
}

Produces:

cnf.mu2e.RPCExternal.MDC2020as.0.tar
cnf.mu2e.RPCExternal.MDC2020as.0.fcl

Index datasets

Extended-template jobs run off index dataset definitions such as:

$ samdes idx_map042425.txt

Definition Name: idx_map042425.txt

Definition Id: 208459

Creation Date: 2025-04-25T15:58:50+00:00

Username: oksuzian

Group: mu2e

Dimensions: dh.dataset etc.mu2e.index.000.txt and dh.sequencer < 0003892

The definitions are created from a list of par files like:

cnf.mu2e.MuonIPAStopSelector.MDC2020at.tar -1
cnf.mu2e.RMCInternal.MDC2020at.tar 2000
cnf.mu2e.RMCExternal.MDC2020at.tar 8000
cnf.mu2e.IPAMuminusMichel.MDC2020at.tar 2000
cnf.mu2e.CeMLeadingLog.MDC2020at.tar 2000
cnf.mu2e.CePLeadingLog.MDC2020at.tar 2000
cnf.mu2e.DIOtail95.MDC2020at.tar 2000

The first column is the par file name and the second column is the number of jobs (-1 means the number of jobs is extracted from the par file itself).
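Reading such a list takes only a few lines of Python. The sketch below is a hypothetical parser, not gen_MergeMap.py itself. It keeps -1 as a marker meaning "take the job count from the par file" and does not try to open the par file.

```python
# Hypothetical parser for the two-column par-file list; gen_MergeMap.py may differ.
PAR_LIST = """\
cnf.mu2e.MuonIPAStopSelector.MDC2020at.tar -1
cnf.mu2e.RMCInternal.MDC2020at.tar 2000
cnf.mu2e.RMCExternal.MDC2020at.tar 8000
"""


def parse_par_list(text):
    """Return (par_file, njobs) tuples; njobs is None when the list says -1."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        name, njobs = line.split()
        count = int(njobs)
        entries.append((name, None if count == -1 else count))
    return entries


entries = parse_par_list(PAR_LIST)
for name, njobs in entries:
    print(name, "from par file" if njobs is None else njobs)
```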

Then using the list above, we can create a definition:

gen_MergeMap.py /exp/mu2e/data/users/oksuzian/poms_map/map041025.txt

run_JITfcl.py

This script drives extended-template jobs from the index definitions. On the grid it:

- Extracts the parameter filename and local index from the map, e.g. /exp/mu2e/data/users/oksuzian/poms_map/merged_map042425.txt

- Downloads the par file and extracts the fcl file

- Runs the job and pushes out all relevant output: art, root, and log files
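The first step, going from a global index to a par file and a local index, can be sketched as follows. This assumes the map assigns contiguous global index ranges to the par files in list order. The real map format and the logic in run_JITfcl.py may differ, and locate is a hypothetical helper.

```python
from bisect import bisect_right
from itertools import accumulate

# Par files with their job counts, in map order (counts from the example list).
par_jobs = [
    ("cnf.mu2e.RMCInternal.MDC2020at.tar", 2000),
    ("cnf.mu2e.RMCExternal.MDC2020at.tar", 8000),
    ("cnf.mu2e.IPAMuminusMichel.MDC2020at.tar", 2000),
]


def locate(global_index, par_jobs):
    """Map a global job index to (par file, local index within that par file)."""
    ends = list(accumulate(n for _, n in par_jobs))  # cumulative job counts
    if not 0 <= global_index < ends[-1]:
        raise IndexError(f"index {global_index} outside 0..{ends[-1] - 1}")
    slot = bisect_right(ends, global_index)  # first range ending beyond the index
    start = ends[slot - 1] if slot else 0
    return par_jobs[slot][0], global_index - start


print(locate(0, par_jobs))
print(locate(2000, par_jobs))
print(locate(11999, par_jobs))
```

With these counts, indices 0 to 1999 fall in the first par file, 2000 to 9999 in the second, and 10000 to 11999 in the third.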

Monitoring

- Recently produced datasets: listNewDatasets.sh

- Official datasets: MDC2020#Current_Datasets

These webpages are generated by nightly cron jobs:

/exp/mu2e/app/home/mu2epro/cron/datasetMon/

Running jobs locally

Both drivers can be run locally once the required environment variables are set, for example:

setup mu2egrid
export fname=etc.mu2e.index.000.0000000.txt

Set any further variables the script complains about in the same way.
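The fname value follows the six-field Mu2e file name layout (tier.owner.description.configuration.sequencer.format). The sketch below splits such a name and builds one for a given index. That the drivers take the job index from the sequencer field is an assumption made for illustration, and both helper names are hypothetical.

```python
def parse_index_fname(fname):
    """Split an index file name into its six dot-separated fields."""
    fields = fname.split(".")
    if len(fields) != 6:
        raise ValueError(f"expected 6 dot-separated fields, got {len(fields)}: {fname}")
    tier, owner, description, configuration, sequencer, fmt = fields
    return {
        "tier": tier,
        "owner": owner,
        "description": description,
        "configuration": configuration,
        "index": int(sequencer),  # assumed to be the job index
        "format": fmt,
    }


def index_fname(index):
    """Build an index file name with a 7-digit, zero-padded sequencer."""
    return f"etc.mu2e.index.000.{index:07d}.txt"


parsed = parse_index_fname("etc.mu2e.index.000.0000000.txt")
print(parsed["index"])
print(index_fname(3891))
```

A local test of a different job would then only need a different fname, for example the value printed by index_fname.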